Elon Musk, Steve Wozniak, Yuval Noah Harari, Emad Mostaque, Andrew Yang, Yoshua Bengio, Stuart Russell, and John J. Hopfield have all signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4.

It opens:

AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research[1] and acknowledged by top AI labs.[2] As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.

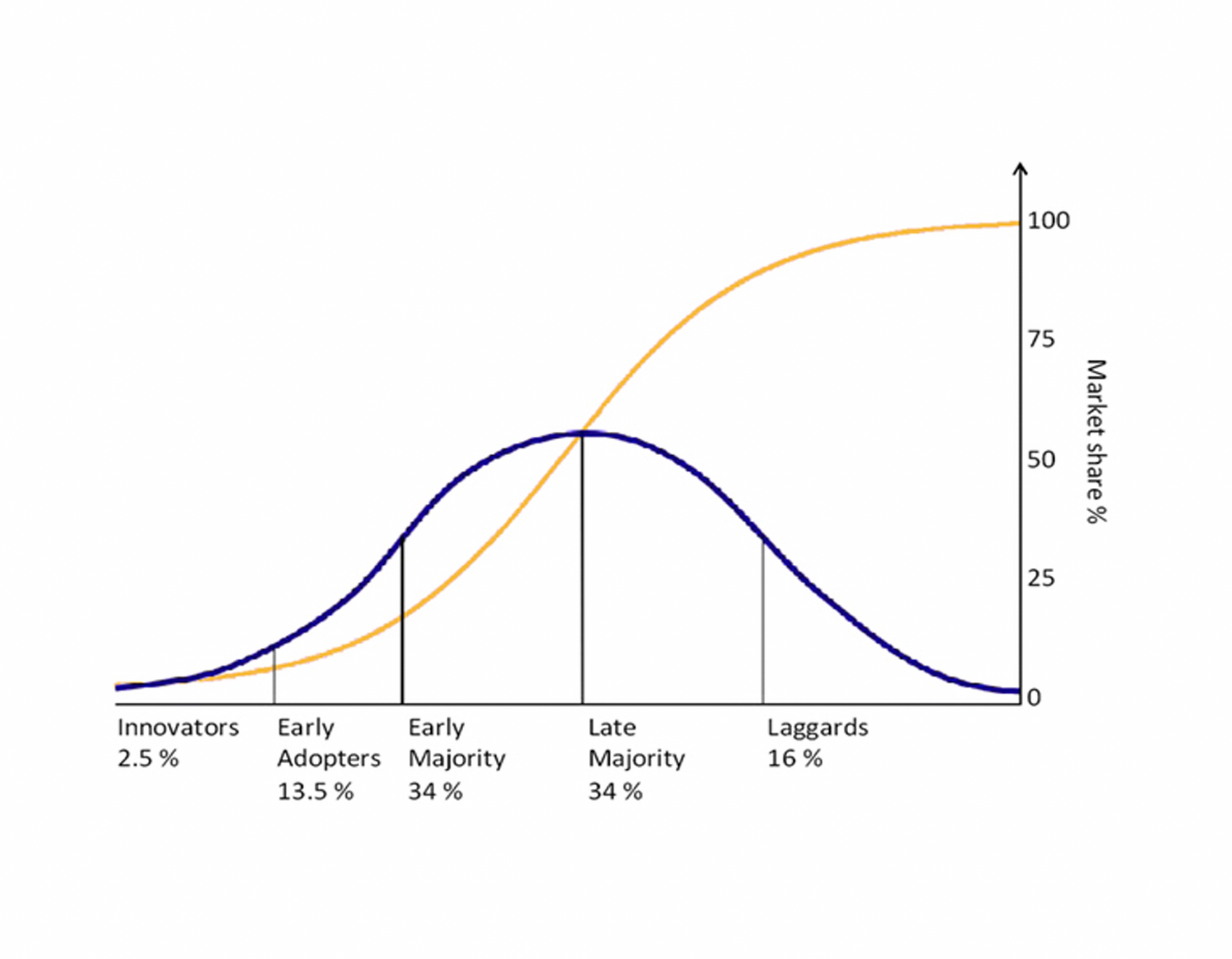

AI is already capable of doing incredible things for us, and society is just catching up. We need time to get across the adoption curve.

As new technologies are introduced and gain market share (yellow line), they move across social groups (blue line) from left to right: starting with innovators, then moving to early adopters and the majorities before finally reaching the laggards.

ChatGPT is now, famously, the fastest-growing consumer application in history, having reportedly reached 100 million monthly active users in only two months (and as you’ve probably heard 100 million times by now, it took TikTok nine months and Instagram 2.5 years to grow that large). Will AI capabilities advance as fast as this adoption did? That seems like it would be bad for humanity.

Contemporary AI systems are now becoming human-competitive at general tasks,[3] and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system's potential effects. OpenAI's recent statement regarding artificial general intelligence, states that "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models." We agree. That point is now.

And here’s the big one:

Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.

At first, I bristled at this idea. What good will a six-month pause do against the pressure to maximize shareholder value and stay competitive in the market? Capitalism is a powerful force and these AI labs are all bound by the constraints of our economic system, which means they’re all incentivized to continue thinking really hard about research and development and rotating imaginary shapes.

The letter goes on to make points similar to some that I’ve written about in the past:

AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt.[4] This does not mean a pause on AI development in general, merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities.

AI research and development should be refocused on making today's powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal.

In parallel, AI developers must work with policymakers to dramatically accelerate development of robust AI governance systems. These should at a minimum include: new and capable regulatory authorities dedicated to AI; oversight and tracking of highly capable AI systems and large pools of computational capability; provenance and watermarking systems to help distinguish real from synthetic and to track model leaks; a robust auditing and certification ecosystem; liability for AI-caused harm; robust public funding for technical AI safety research; and well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.

A quick aside just to note that a founder of the field of AI alignment says this pause isn’t good enough

Eliezer Yudkowsky, widely regarded as a founder of the field of AI alignment, didn’t sign this letter. Instead, he wrote his own TIME op-ed calling for a shutdown of all research and development on AI that could become more intelligent than humans (AGI, or artificial general intelligence, meaning systems as capable at any general task as we are). He literally says “shut it all down” four times (including the headline).

This is compelling. The AI systems we have now are useful and practical, and (less terrifying, but terrifying still) no one actually knows how they really work. Let’s learn from Sarah Connor and stop any possible dystopias long before they start, or at least while they’re still manageable.

As incredible as I find today’s narrow AI like GPT-4, I feel an equal-and-opposite disillusionment at the idea that superintelligent AGIs, smarter than we are, would play nicely with humans. Yudkowsky feels the same way:

The key issue is not “human-competitive” intelligence (as the open letter puts it); it’s what happens after AI gets to smarter-than-human intelligence. Key thresholds there may not be obvious, we definitely can’t calculate in advance what happens when, and it currently seems imaginable that a research lab would cross critical lines without noticing.

While he does say this six-month pause would be better than nothing, Yudkowsky also said the letter is “understating the seriousness of the situation and asking for too little to solve it” considering the massive ramifications of superintelligent machines.

On Feb. 7, Satya Nadella, CEO of Microsoft, publicly gloated that the new Bing would make Google “come out and show that they can dance.” “I want people to know that we made them dance,” he said.

This is not how the CEO of Microsoft talks in a sane world. It shows an overwhelming gap between how seriously we are taking the problem, and how seriously we needed to take the problem starting 30 years ago.

We are not going to bridge that gap in six months.

He makes a compelling point.

It seems fitting that Eliezer is pushing for stronger action on AI regulation, considering he is widely credited (even by ChatGPT) with coining the name for perhaps the most popular AI scenario, the “intelligence explosion,” aka foom.

“Foom” is a term coined by AI researcher Eliezer Yudkowsky to describe a rapid, self-accelerating, and self-improving process by which an artificial intelligence (AI) system could achieve superintelligence in a very short period of time. The concept is based on the idea that once an AI system becomes capable of recursive self-improvement, it could lead to an intelligence explosion, causing the AI to become vastly more intelligent than humans almost overnight. This scenario raises concerns about the control and safety of such superintelligent AI systems, as their rapid development might outpace our ability to manage or understand them.

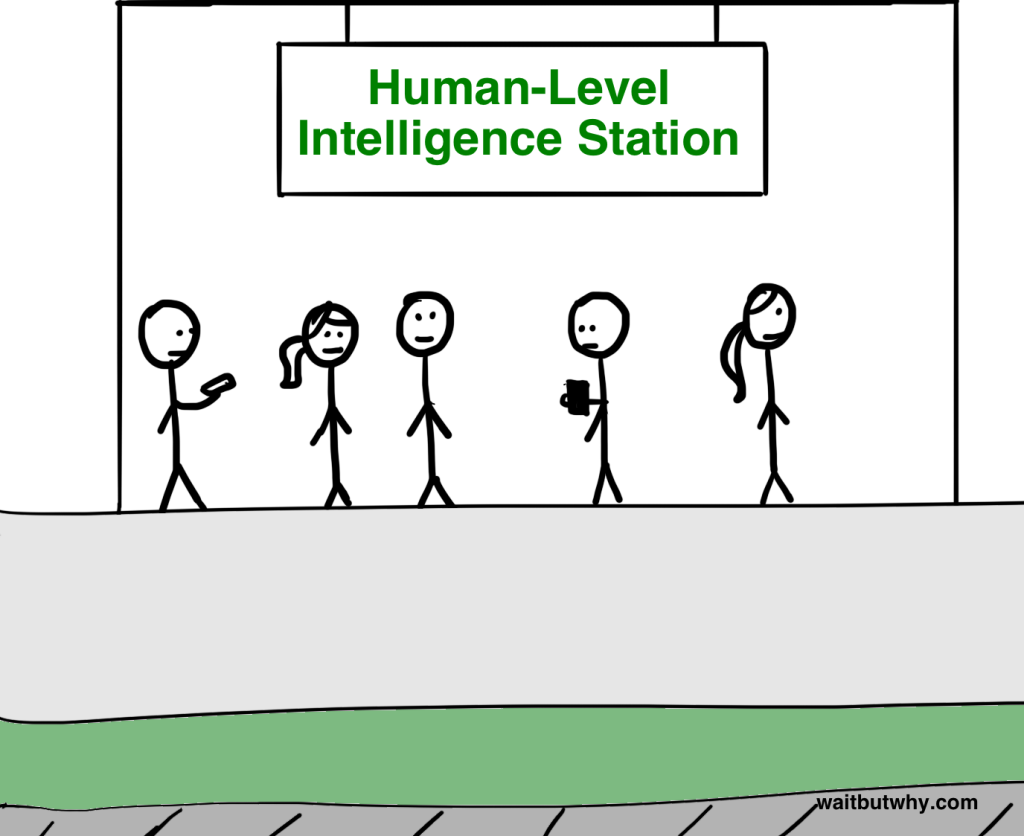

This is perhaps best visualized by the train station analogy used by Nick Bostrom and clearly illustrated by Tim Urban in his must-read 2015 essay on the future of AI:

One might even argue that the noise the train makes when it flies by the station sounds like foom (image via Wait But Why)

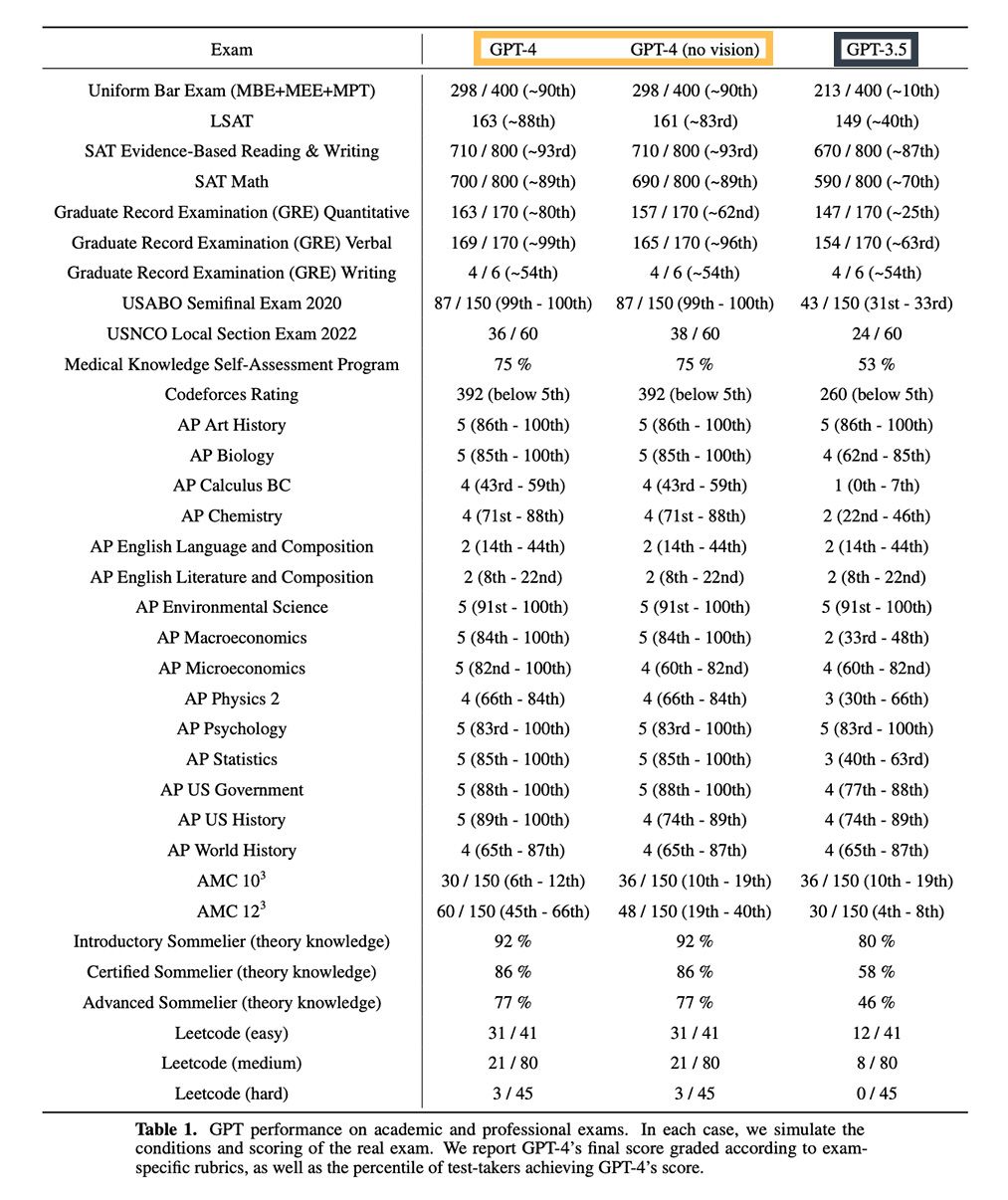

The AI train isn’t quite at that bullet-train speed yet. But GPT-4 is significantly more advanced than OpenAI’s previous models, and it’s capable of passing some of the most demanding tests of human competency, including the bar exam, and of scoring a combined 1400 or so out of 1600 on the SAT.

GPT-4 has a hard time with more advanced English and Lit classes, but the improvement in scores compared to the GPT-3.5 model that took the world by storm is right there to see.

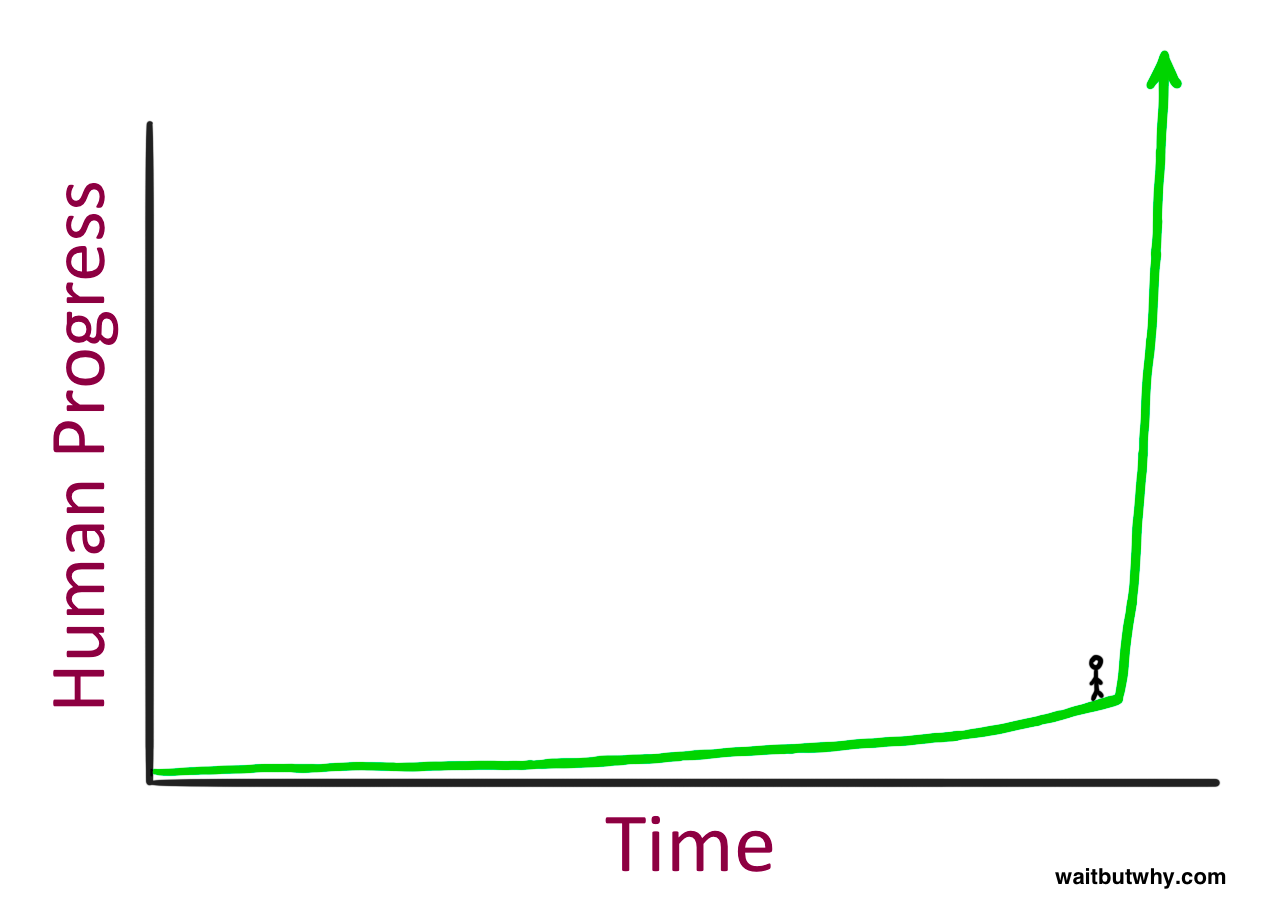

Once the intelligence explosion starts, it’ll only get faster and faster. Tim Urban’s charts help visualize this unimaginable idea:

We aren’t really prepared for this kind of intelligence explosion, and lots of very smart and well-informed people agree that we need to pause before we jump into this, most notably Yudkowsky, who, again, is considered a founder of the very field we’re talking about.

We literally cannot imagine a being this much more intelligent than us. This isn’t comparing a bumbling beer-sipping Homer Simpson to the genius billionaire playboy Tony Stark; this is imagining an entity akin to a god, one that could treat us the way a human treats an anthill: stepping on it, or paving over the entire colony to build a highway without a first or second thought. Our entire species is at risk.

It’s easy to imagine AI tools that already exist taking entry-level roles away from humans. Terrifyingly, this seems poised to impact younger people first. How is a college grad supposed to “gain experience” when AI has taken over all the entry-level jobs?

We need more time to figure things out, and AI labs that promise to be ethical and good and do the right thing should listen.

The open letter concludes:

Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an "AI summer" in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt. Society has hit pause on other technologies with potentially catastrophic effects on society.[5] We can do so here. Let's enjoy a long AI summer, not rush unprepared into a fall.

Here’s my take: let’s have a summer for humanity. But we should also leave things where they are. This is extremely uncharacteristic for me to say, but this is a time when growth is no longer necessary, at least not until we figure out some big things. This is about all of us.