You have to give flowers to CNET for taking a chance on something new.

In the unending race to stay ahead of the curve, editors at the 27-year-old tech website decided to experiment with posting articles generated using an undisclosed “AI engine” back in fall 2022.

It’s only been a few months and the experiment is... not going well.

CNET, founded back in the early ’90s, is one of the earliest websites to cover tech news and reviews. I haven’t been paying close attention to all the changes happening at CNET recently, but still, it’s easy to see where they were coming from with this whole thing.

Using AI to generate straightforward articles makes sense: these were entry-level explainers, probably intended to get search traffic from people looking for quick answers on basic concepts. Nothing high-stakes.

In theory, this sounds like perfect fodder for generative AI. These stories don’t need to be deeply reported and only require relatively rudimentary research. And not making a big announcement (they only had tiny disclosures in the articles) is a way to test things out before the project gets put under the public spotlight. Entry-level writers might be tasked with editing the AI output for factual and stylistic consistency. Seems like an easy slam dunk. It was not.

There were caveats that these articles were “written” with AI, but the simple fact that CNET was doing this without a more formal announcement quickly raised the expected ethical questions. Skeptics said it would eliminate work for entry-level writers: how is an up-and-coming tech writer supposed to build up their chops if they can’t write quick-hit posts like this?

CNET finally spoke out this week, on 16 January 2023, with a lofty article about how they are doing this in an effort to “separate hype from reality.”

But not long after that, CNET entirely paused publishing any new articles with AI.

According to The Verge, Connie Guglielmo (the editor-in-chief of CNET) held a call today, 20 Jan 2023, where she said, “we didn’t do it in secret, we did it quietly” while announcing the pause.

The Verge reported that this was due to the disclosure controversy, but if you ask me, the bigger issue is that at least three AI-generated articles published by CNET were wrong about basic facts.

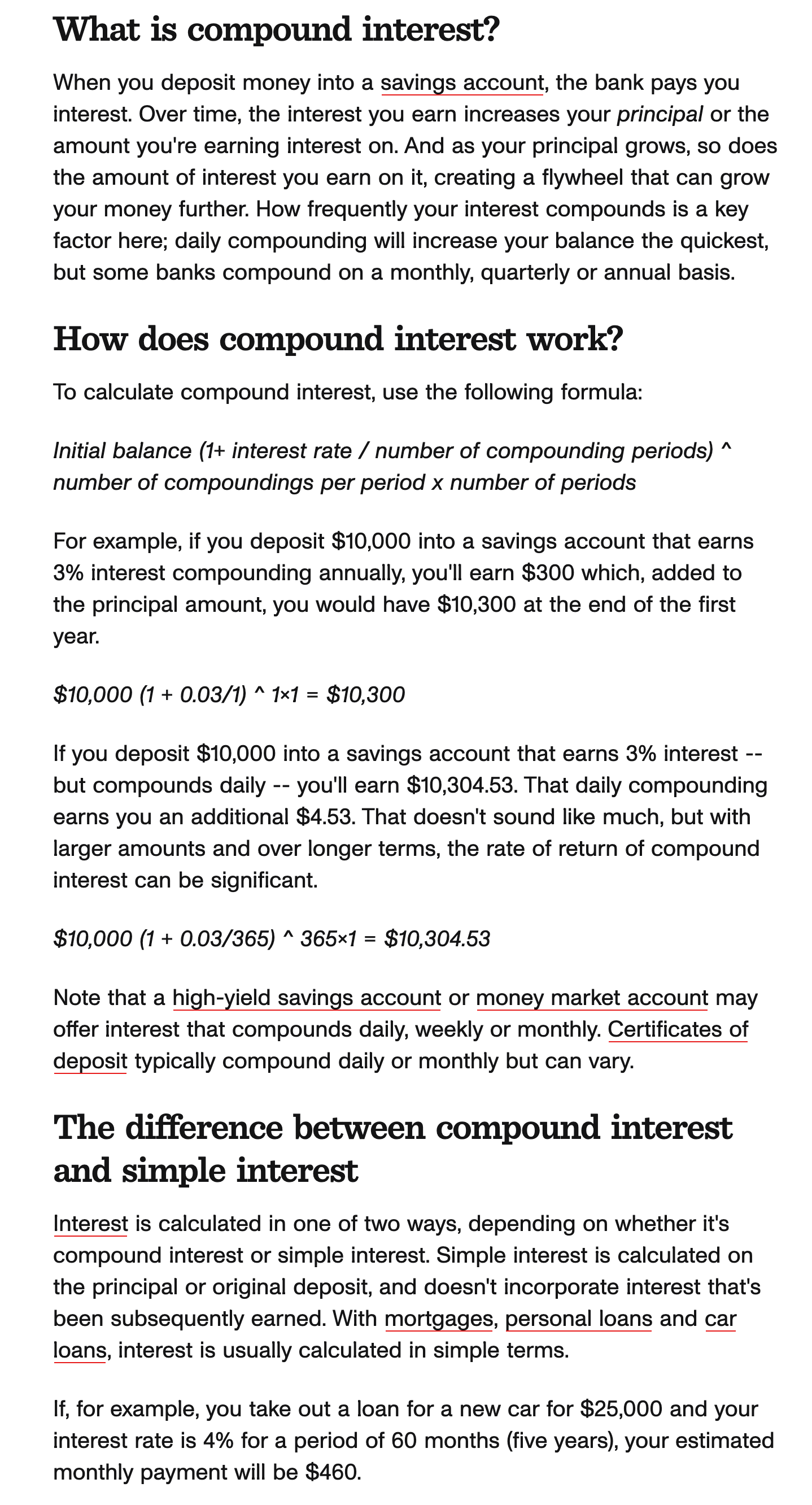

In reference to the above screenshot about compound interest, Futurism observed:

Take this section in the article, which is a basic explainer about compound interest (emphasis ours):

"To calculate compound interest, use the following formula:

Initial balance (1+ interest rate / number of compounding periods) ^ number of compoundings per period x number of periods

For example, if you deposit $10,000 into a savings account that earns 3% interest compounding annually, you'll earn $10,300 at the end of the first year."

It sounds authoritative, but it's wrong. In reality, of course, the person the AI is describing would earn only $300 over the first year. It's true that the total value of their principal plus their interest would total $10,300, but that's very different from earnings — the principal is money that the investor had already accumulated prior to putting it in an interest-bearing account.

"It is simply not correct, or common practice, to say that you have 'earned' both the principal sum and the interest," Michael Dowling, an associate dean and professor of finance at Dublin College University Business School, told us of the AI-generated article.

This is exactly the kind of blatant mistake an editor is supposed to catch. Here’s the most damning part, from that same Futurism article:

It's a dumb error, and one that many financially literate people would have the common sense not to take at face value. But then again, the article is written at a level so basic that it would only really be of interest to those with extremely low information about personal finance in the first place, so it seems to run the risk of providing wildly unrealistic expectations — claiming you could earn $10,300 in a year on a $10,000 investment — to the exact readers who don't know enough to be skeptical.
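To make the arithmetic concrete, here is a minimal Python sketch of the standard compound interest calculation applied to the $10,000 at 3% example. The function names are my own for illustration and the code is not taken from CNET or Futurism; it just separates the ending balance from the interest actually earned.

```python
def compound_balance(principal, annual_rate, periods_per_year, years):
    """Ending balance using the standard formula P * (1 + r/n) ** (n * t)."""
    return principal * (1 + annual_rate / periods_per_year) ** (periods_per_year * years)


def interest_earned(principal, annual_rate, periods_per_year, years):
    """Interest earned is the ending balance minus the original principal."""
    return compound_balance(principal, annual_rate, periods_per_year, years) - principal


# The example from the article: $10,000 at 3%, compounded annually, for one year.
balance = compound_balance(10_000, 0.03, 1, 1)
earned = interest_earned(10_000, 0.03, 1, 1)

print(f"Balance after one year: ${balance:,.2f}")  # $10,300.00
print(f"Interest earned: ${earned:,.2f}")          # $300.00
```

The $10,300 figure is the balance, and the $300 figure is the earnings. The original article conflated the two.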

The other two CNET AI articles, one of which involved a description of car loan payments and the other an explanation of how certificates of deposit compound, also contained basic factual errors in describing fundamental concepts. It looks like these mistakes are fixed now, but how did this happen in the first place?

Journalists talk about ethics all the time: how they’re the fourth branch of the government, how they have a responsibility to provide access to information, to carry the banners of free speech. Any real journalist, even self-proclaimed ones, has an ethics policy (I have a brief one on my About page). This is a clear mistake, but who really made the mistake? AI or humans?

If you ask me, this feels like a bigger L for CNET’s editors than it does for generative AI.

This is literally why we have editors

We know large language models like ChatGPT are prone to providing confident answers that are completely wrong. But human writers are the same way! That’s why editors exist.

I looked through some of the articles CNET published with their “AI assist.” They say human editors reviewed the content for accuracy and clarity, but after spending a lot of time reading text generated by ChatGPT, I can skim these articles and see huge chunks that scream they were written by AI.

Editors aren’t supposed to just tweak facts; they’re also supposed to edit voice and style. Once they get the facts right, it’s a journalist’s job to tell a story in a compelling way. Make compound interest interesting, somehow. They do not accomplish that mission here. Let’s go beyond the obvious factual mistakes and take a minute to talk about how these words actually read.

The below is from CNET’s aforementioned article explaining compound interest, the one where they initially got the total wrong in the part about earning $300 on a $10,000 principal.

It’s about compound interest, so it’s boring, yes. But the thing that most stands out to me is how the article jumps right into each paragraph. Without segues or intros, the flow of each word in every sentence feels stiff... one might even call it robotic. There are some parts here and there where a person seemingly added a comma, but overall, this is very sterile writing, even for the topics discussed.

Particularly the way it just dives right in with a weird formula, “To calculate compound interest” blah blah blah, sounds like an AI. Human writers would feel compelled to use a more superfluous intro or provide some sort of perspective in an attempt to make things less banal. This is just a bland summary, like a plain bagel with no toppings.

If the factual errors weren’t enough, even for a boring explainer on compound interest, the style of CNET’s AI articles sucks, too. It feels weird to even call it style.

It is going to fall upon humans to come up with more creative and effective solutions for writing with the assistance of AI. I have a few obvious solutions.

Obvious solutions

Obvious solution #1: be conservative and don’t use AI to generate stories. Keep paying humans, give entry-level writers a path to gaining experience, and keep the AI haters happy. This is probably the least progressive move.

Obvious solution #2: be better and don’t publish articles that have clear factual errors. The job of a good editor is to catch factual and stylistic mistakes, and then work with a writer to fix them. Depending on what AI model CNET used to generate these articles, their editors might not be able to tweak outputs in the same way they could provide feedback to human writers, who are capable of learning and gaining new skills through experience. But whatever developer trained the model should be available to help tweak it. Regardless, if you can’t figure out how to do this, then it raises far more important questions about journalism than it does about AI language models.

Less obvious solution #3: invent a way to distinguish content that was generated by AI from content created by a human. I always read through any AI-generated content I publish here on Gold’s Guide, but I also use a monospace code font to make it clear when any significant amount of text was generated by an AI. You can see this in articles like my news hit when ChatGPT was first released. This was not hard for me to implement. Every website publishing tool has options for code fonts, because they’re baked into the HTML spec that the entire internet runs on in the same way that bold and italic font variants are.
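As a rough illustration of how little work this takes, here is a small Python sketch that wraps AI-generated text in the standard HTML `<code>` element, which browsers render in a monospace font by default. The helper name `mark_ai_generated` is hypothetical, and this is an assumption about one way to do it, not a description of how any particular publishing tool works.

```python
from html import escape


def mark_ai_generated(text: str) -> str:
    """Wrap AI-generated text in a <code> element so it renders in monospace.

    escape() keeps characters like < and & from being read as markup.
    """
    return f"<code>{escape(text)}</code>"


# Hypothetical usage: tag a machine-written sentence before publishing it.
print(mark_ai_generated("Compound interest is interest earned on interest."))
# <code>Compound interest is interest earned on interest.</code>
```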

If the emergence of AI is the start of the next tech epoch, the first media publications that figure out how to properly balance the speed and verbosity of AI with the precision and accuracy of good journalists will be poised to disrupt their competition. It’s fun to imagine that a storied tech publication like CNET might be the first to do it, but this whole debacle should serve as a shining example of how not to use AI for journalism.