The latest meme I’ve found myself laughing at time and time again has also left me, time and time again, wondering how the hell people were making them.

Call it the frequency illusion or chalk it up to The Algorithm learning my interests, but ever since I came across the shockingly amazing Midjourney images of Jodorowsky’s Tron (a movie which was never made) I’ve been seeing more and more of these kinds of slideshows around the internet.

When I first watched Harry Potter by Balenciaga, though, it was like the dawn of a new era — the sunken cheeks, hyper-stylized hair, wistfully-piercing gazes — these videos are uncanny and hilarious. The blinking, head movement, and lip-synced dialog take these to a new level.

The creator of these really knows what they’re doing — the results are fantastic. As of this writing, the original Harry Potter by Balenciaga video has over 5.6 million views on YouTube.

So I made one myself — with characters from the cult-classic horror film American Psycho.

(click here to jump ahead to read about how I did it)

What’s going on here?

AI memes reached the mainstream in late March 2023, when incredibly realistic images of Pope Francis wearing an insanely gigantic white puffer coat, looking like he just stepped off the runway, started spreading around the internet. That was a fun sentence to write.

The Pope has always dressed with style, but since when does he have swagger? These images fooled a lot of people.

It was like The Dress, a moment where people had trouble believing their senses. No one knew what to believe.

at this point I assume any unusual photos like this were generated by AI https://t.co/RfWSnEkdkL

— Tyler Gold (@tylergold) March 30, 2023

Yeah, it’s generated by AI (zoom in on his right hand)

Midjourney v5 had just been released a few days before, and it seems quite apparent that these next-level convincing images are using the new model, which is currently only available to a small group of early testers.

As this was all happening, my YouTube feed was simultaneously becoming inundated with less confusing-to-the-senses but still shockingly compelling videos that imagine various characters from the world’s most beloved fictional universes... as if they were in high-fashion Balenciaga photoshoots.

The videos I find myself watching all have one thing in common: they combine multiple AI tools and remix multiple worlds together to tell a story. A remix on multiple dimensions.

Everything is a remix

What once started with simple Advice Animals (images with funny captions in all-caps Impact) quickly gave way to inside-the-internet jokes like the infamous “Damn, Daniel” — and then thousands upon thousands of unimaginable variations.

The idea that everything is a remix shouldn’t come as a surprise to anyone who has been on the internet — and it’s an idea that is only becoming more relevant in a world where generative AI can magically conjure content.

I’ve seen AI memes uncannily portray the voices of US Presidents playing elaborate games of Call of Duty, debating the best Halo game, and just about anything else you’d care to imagine, spreading across public feeds and private group chats alike.

When I first came across this style of video, things were technically simpler, though still conceptually interesting (I really liked the re-imagined versions of Star Wars).

These images were really cool, but they were also stills. The Harry Potter memes were, fittingly, the first ones I saw which applied the incredible animations to the faces: they blink, nod their heads, and even lip sync dialog.

This adds a shocking level of realism — but of course things are still noticeably rough around the edges, at least as of this writing, especially compared to the swagged-out Pope. For now, camera flashes are a useful way to cover imperfections up in these photoshoot-style videos.

The Harry Potter videos are just the tip of the iceberg: there are videos imagining Game of Thrones, Family Guy, Pulp Fiction, The Office, Twilight (fitting), Breaking Bad, and even Disney princesses as if they were in Balenciaga photoshoots. Other notable additions include Harry Potter by Adidas (this one goes hard), Marvel characters by Gucci, and perhaps most incredibly of all, DC characters by Barbie.

Here’s a YouTube playlist of my favorites.

How are these AI Balenciaga meme videos made?

This is all made possible by a combination of generative AI tools: ChatGPT to write prompts that Midjourney can turn into images; creating custom voices in Eleven Labs to synthesize dialog; animating it all in D-ID (this is what takes things to the next level); finding licensable music; and then compiling it all in a video editor.

After a couple hours of initial research and playing around with the different tools, the whole process of creating American Psycho by Balenciaga took me between three and four hours from start to finish.

Here are all the nerdy details.

- Quick research

- Using ChatGPT to generate Midjourney prompts

- Generating images in Midjourney

- Synthesizing voices with Eleven Labs

- Animating and lip syncing everything with D-ID

- Finding music

- Editing it all together

First, a note on pricing. These tools all have free trial options (except Midjourney, which just stopped providing free trials), but at the end of the day, if you’re making more than one of these videos you’re probably going to blow past the free quota that they give you during the trials. Here are the pricing details for the tools I used.

- ChatGPT: free, with limitations (ChatGPT Plus costs $20 / month)

- Midjourney: $10 / month (pricing details only available after creating an account)

- Eleven Labs: $5 / month (I use the Creator plan, $20 / month)

- D-ID: $5.99 / month

- Epidemic Sound: $9 / month

Total cost-per-month for these tools comes out to a surprisingly clean $49.99 (counting ChatGPT Plus and the $5 Eleven Labs tier), with discounts for purchasing annual plans up-front.
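If you want to check my math, here is a quick sketch of the sum in Python. It assumes the total counts ChatGPT Plus at $20 and the $5 Eleven Labs tier (not the $20 Creator plan I actually use), since that is the combination of the listed prices that lands on $49.99.

```python
from decimal import Decimal

# Monthly prices in US dollars, as listed above.
# Assumption: ChatGPT Plus and the $5 Eleven Labs tier are the ones counted.
monthly_prices = {
    "ChatGPT Plus": Decimal("20.00"),
    "Midjourney": Decimal("10.00"),
    "Eleven Labs": Decimal("5.00"),
    "D-ID": Decimal("5.99"),
    "Epidemic Sound": Decimal("9.00"),
}

# Decimal avoids the floating-point rounding noise that plain floats can add.
total = sum(monthly_prices.values(), Decimal("0"))
print(f"Total per month: ${total}")
```

Swap in the free ChatGPT tier or a different Eleven Labs plan and the total shifts accordingly.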

Now that brass tacks are taken care of, we can get started.

Quick research

As with most worthwhile projects, I got started with a quick YouTube search to find a tutorial. First I watched a video from PromptJungle (which sounds like it’s narrated by AI) and got the gist; then I watched this video by Maximize and locked it all in.

The method I describe below takes what I learned from those two videos (including base prompts from the description of the PromptJungle video, tweaked for American Psycho) and mixes in some of my own ideas.

Using ChatGPT to generate Midjourney prompts

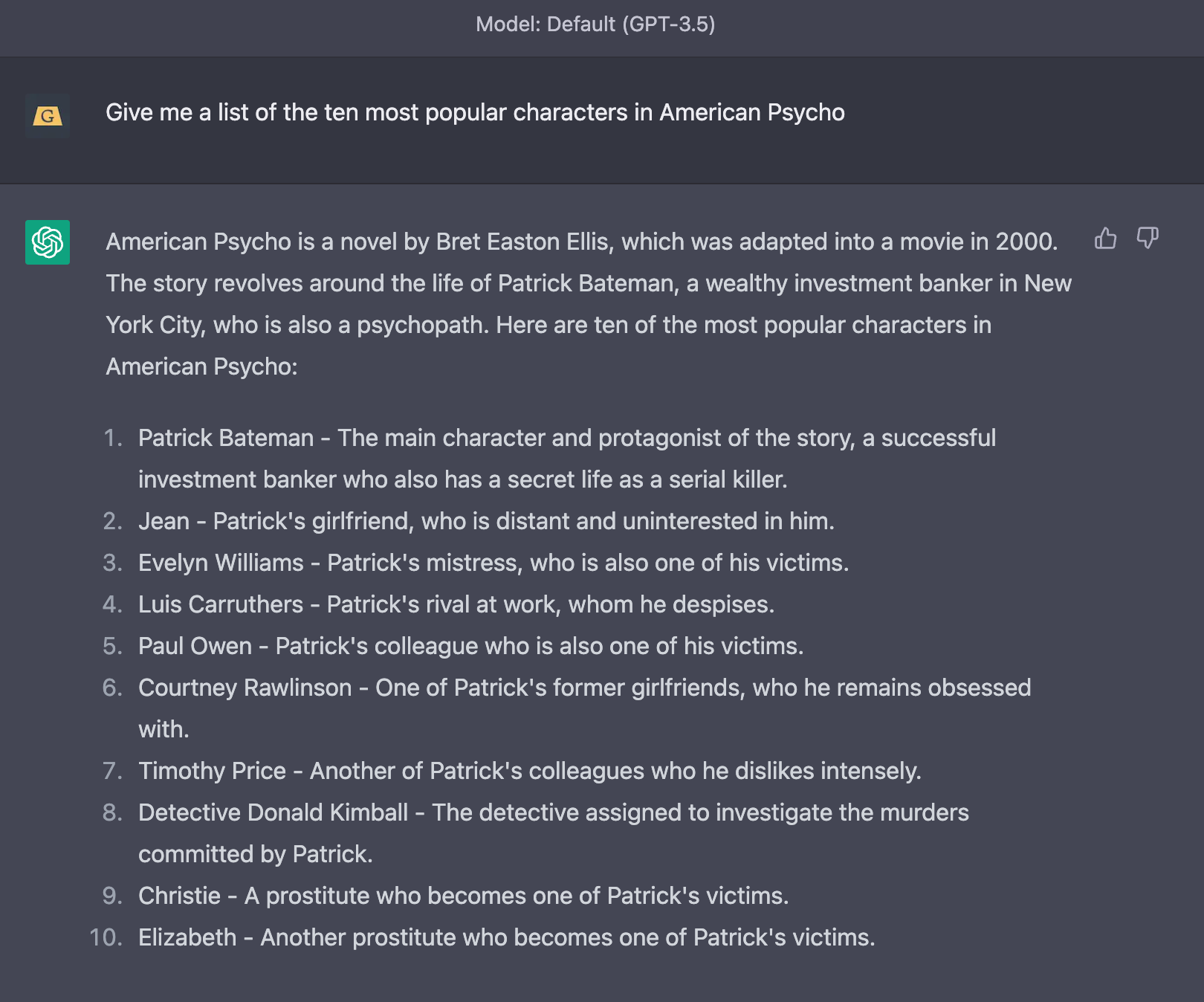

I opened up ChatGPT and, using the Default model, prompted the AI to give me a list of the characters in American Psycho. Some of the results were a little rough around the edges (ChatGPT listed the pivotal Paul Allen as Paul Owen, which is the character’s name in the novel rather than the film) but it was a solid effort. That’s what I get for not choosing the GPT-4 model.

Next I set some context for ChatGPT: it is now a Balenciaga fashion designer with 20 years of experience. Here’s the full prompt I used, which is more-or-less unchanged from the PromptJungle tutorial.

Now, act as a Balenciaga fashion designer with 20 years of experience. I want you to dress each of these 10 characters in a unique Balenciaga piece for a runway show. Just write the name of the character and the piece. Remember, it's a 1990 fashion show.

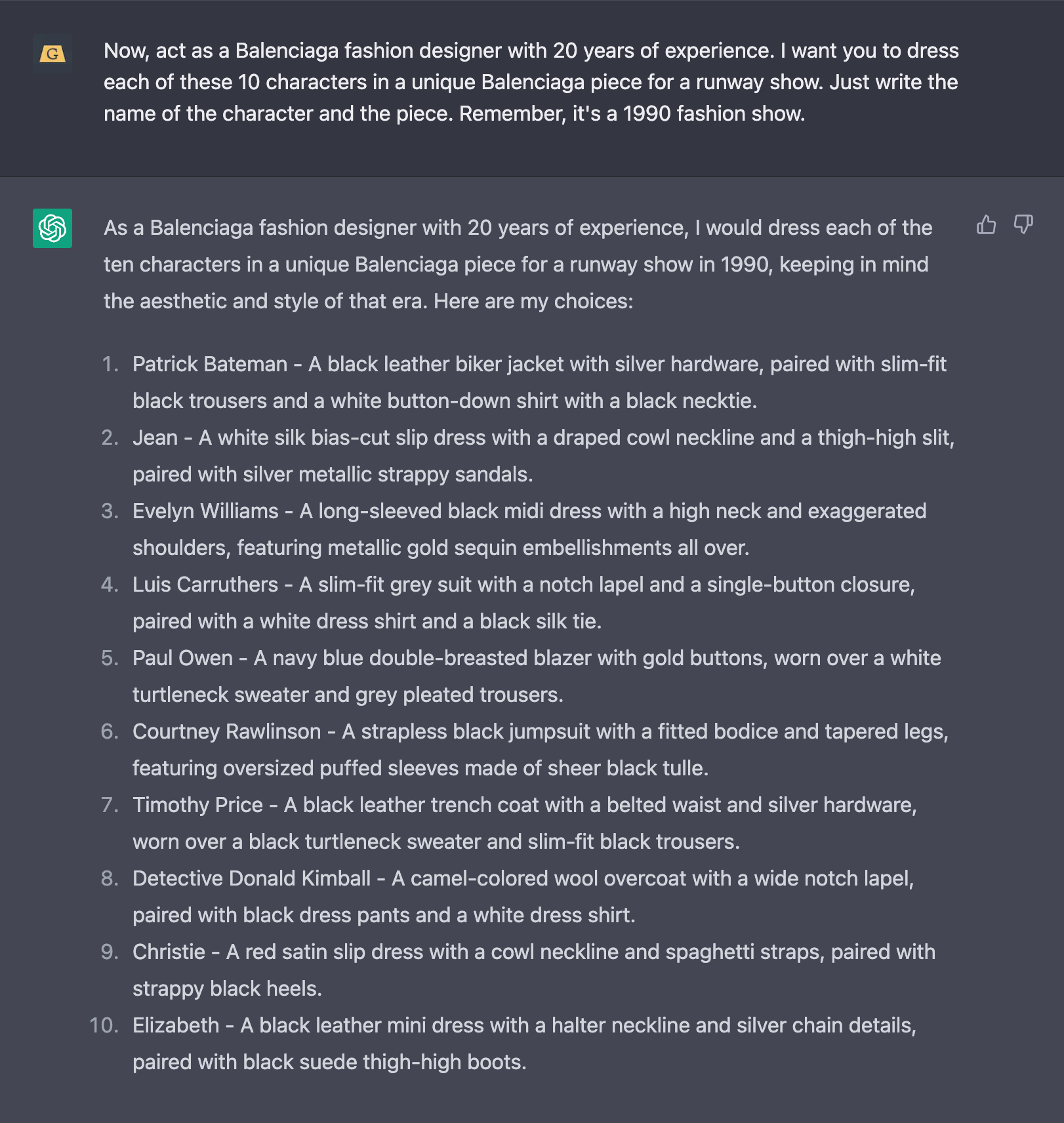

After some quick confirmation that ChatGPT understood what I was asking it to do (this has become a bit of a habit), and then one final instruction, we’re off to the races.

Here are those second and third prompts from the PromptJungle video, with minor tweaks on my end:

Ok, now this is a prompt that I use to generate images from another AI image generation tool called Midjourney: '1990 screengrab of striking female model Hermione Granger wearing a grotesque black Balenciaga dress, fashion movie scene, Balenciaga commercial --ar 16:9 --v 5'. Just say 'Y' if you understand.

I want you to rewrite the prompt but for every character, with the clothes you chose for them. Remember to write the prompt exactly as I pasted it, with the same format and parameters. Only change the gender of the model, the name of the character, and the description of the clothing to fit each character. Do you understand? Please ask if you need more information.

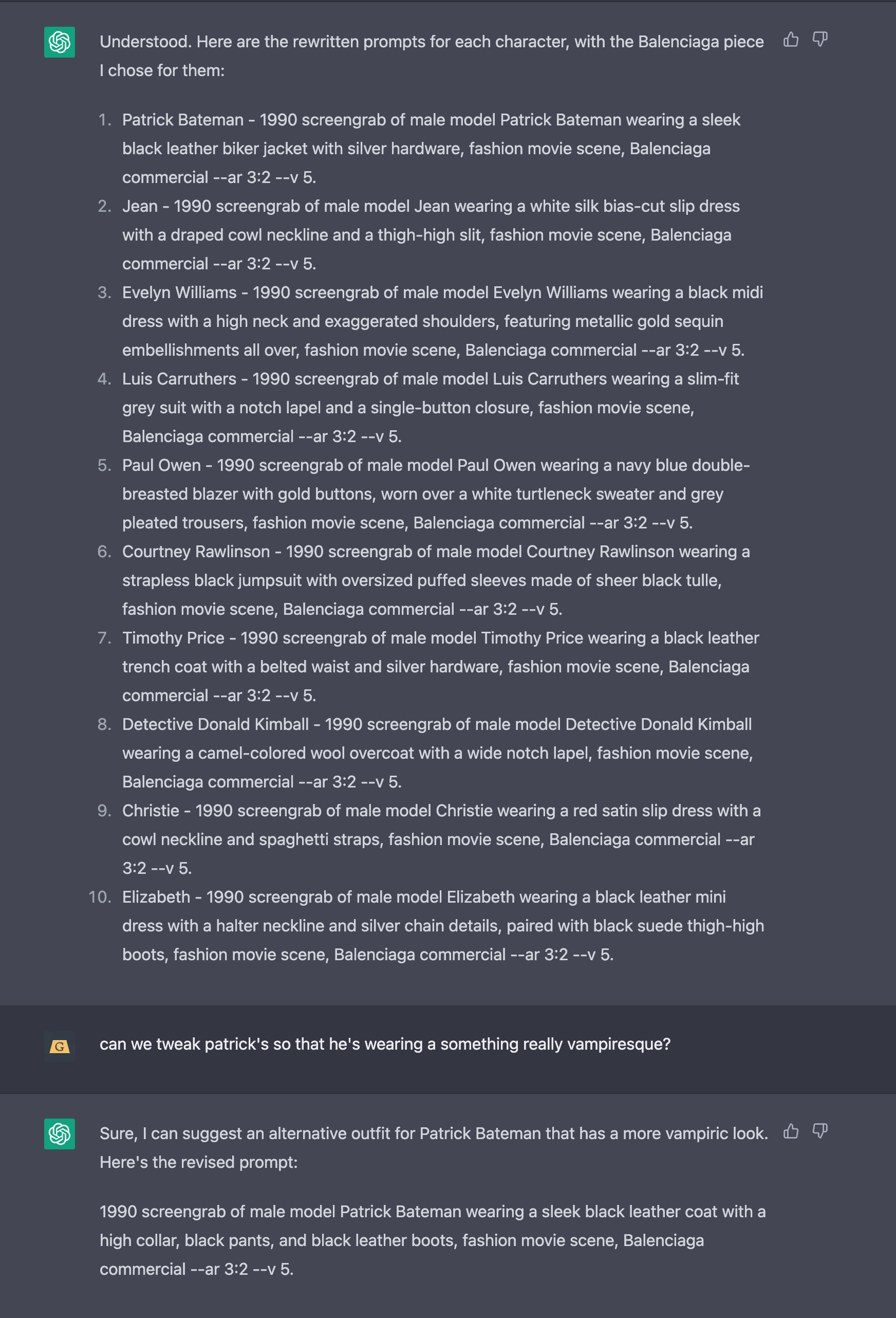

And here are the results, which I then began pasting into a chat with Midjourney.

This is your reminder that generative AI rarely gets it right on the first try. I went back and forth with ChatGPT on a few different prompts after I quickly realized that it messed up again and forgot to change “male model” to “female model” in the prompts for the female characters. ChatGPT is so quick that it’s relatively painless to go back and forth like this.

It remains to be seen if AI will need this kind of human guidance forever, or if it’s just a temporary limitation. For now, I take solace in knowing that I was the one “executive producing” this video, even though the AI tools did all the manual labor.
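The rewrite step I asked ChatGPT to do is mechanical enough that you could also do it with a small script, which sidesteps the “male model” slip entirely. This is only a sketch of that idea, not what I actually did: the `Look` class and `build_prompt` function are names I made up for illustration, and the second outfit is invented as a placeholder.

```python
from dataclasses import dataclass

# Template based on the prompt format shown above; only three fields vary.
TEMPLATE = (
    "1990 screengrab of {gender} model {name} wearing {outfit}, "
    "fashion movie scene, Balenciaga commercial --ar 16:9 --v 5"
)


@dataclass
class Look:
    """One character and the piece chosen for them (hypothetical helper type)."""
    name: str
    gender: str  # "male" or "female"
    outfit: str


def build_prompt(look: Look) -> str:
    """Fill the template for one character, rejecting unexpected gender values."""
    if look.gender not in ("male", "female"):
        raise ValueError(f"unexpected gender for {look.name}: {look.gender!r}")
    return TEMPLATE.format(gender=look.gender, name=look.name, outfit=look.outfit)


looks = [
    Look("Patrick Bateman", "male",
         "a sleek black leather biker jacket with silver hardware"),
    # Placeholder outfit, invented for this example.
    Look("Evelyn Williams", "female", "an oversized black trench coat"),
]

for look in looks:
    print(build_prompt(look))
```

Because the gender is stored next to each character, it cannot silently carry over from the previous prompt the way it did in my ChatGPT session.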

Generating images in Midjourney

Midjourney has halted free trials ever since the explosion of people using the product to create deepfakes of the Pope (the CEO of the company told The Verge that these events were “unrelated”). The personal plan is affordable enough at $10 / month (they only show pricing after you’ve signed up on a trial basis).

A quick primer on Midjourney: the entire system is based in Discord. Once you’re logged in, you can use the command /imagine in a chat with Midjourney to generate images. The AI provides four options for every prompt, leaving you to decide which one of the four variations best represents your vision. Then you can upscale the ones you like, or generate further variations if you want to tweak certain details. Things get more advanced from there.

One note on aspect ratios: my original prompts used --ar 3:2, which is a 3:2 aspect ratio, ideal for portraits, but the standard widescreen video format is 16:9. I’ve amended any prompts included here so that you don’t make the same mistake I did. If you use ChatGPT to help write your prompts, make sure it doesn’t make a mistake, either.

This was the second most fun part, after editing it all together. Here’s a sample of what my prompts to Midjourney looked like:

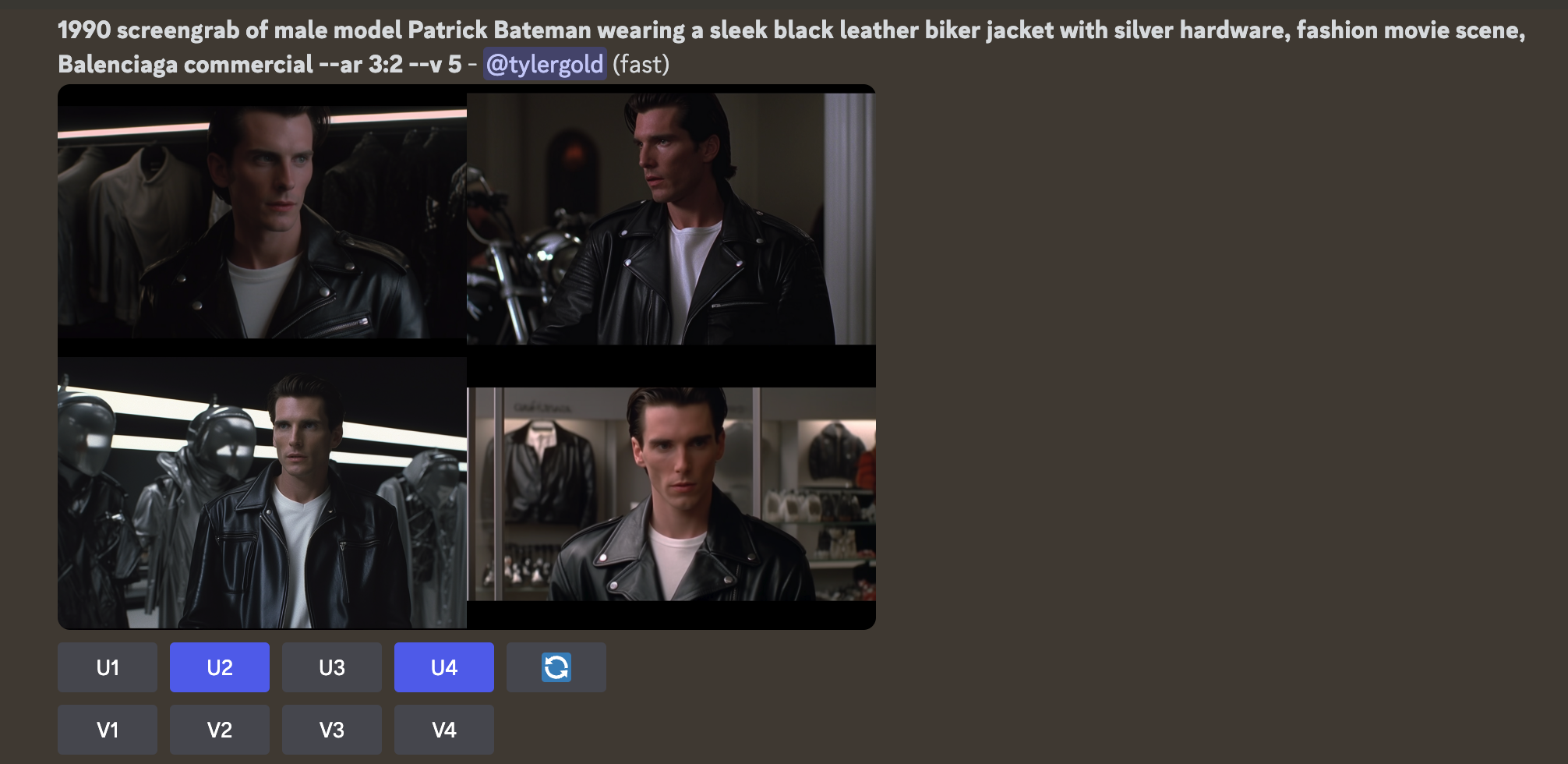

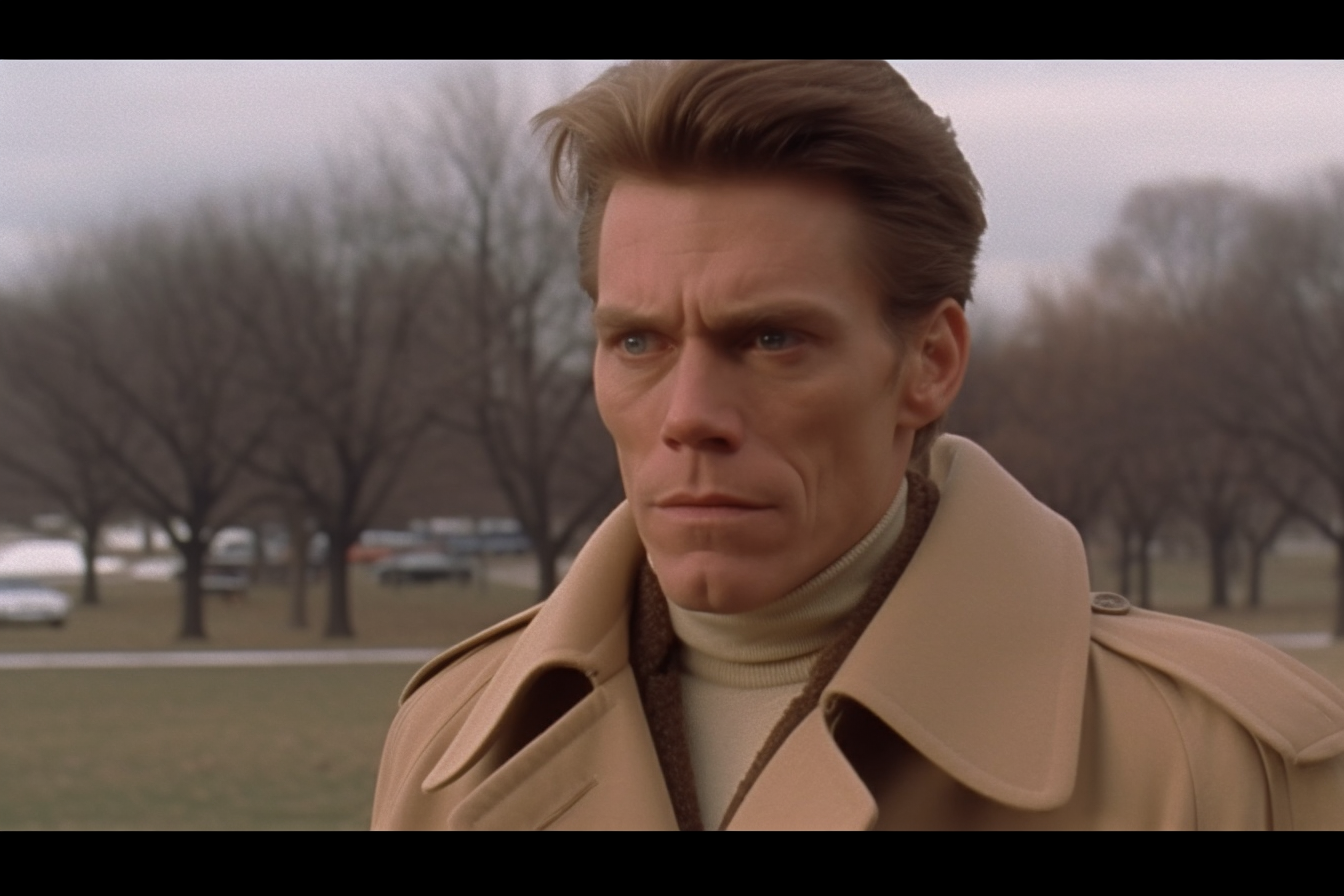

1990 screengrab of male model Patrick Bateman wearing a sleek black leather biker jacket with silver hardware, fashion movie scene, Balenciaga commercial --ar 16:9 --v 5

I liked the second and fourth options here — the fourth one is the image I ended up using for the opening scene of the video. He kinda looks like Ponyboy from The Outsiders. I went through this process about a dozen more times to get all the images I needed, making tweaks to prompts to hone the styles in and upscaling the ones I liked.
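Since I got the aspect ratio wrong on my first pass, here is a rough sketch of a check you could run over a batch of prompts before pasting them into Midjourney. It only looks at the `--ar` flag as it appears in the prompts in this article; the function names are my own, and the second sample prompt is a shortened stand-in.

```python
import re

# Matches the aspect-ratio flag as written in the prompts above, e.g. "--ar 16:9".
AR_PATTERN = re.compile(r"--ar\s+(\d+):(\d+)")


def aspect_ratio(prompt):
    """Return (width, height) from the prompt's --ar flag, or None if absent."""
    match = AR_PATTERN.search(prompt)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def is_widescreen(prompt):
    """True only when the prompt explicitly asks for 16:9."""
    return aspect_ratio(prompt) == (16, 9)


prompts = [
    "1990 screengrab of male model Patrick Bateman wearing a sleek black "
    "leather biker jacket with silver hardware, fashion movie scene, "
    "Balenciaga commercial --ar 16:9 --v 5",
    # Shortened stand-in for one of my original, mistaken prompts.
    "1990 screengrab of male model Paul Allen, Balenciaga commercial --ar 3:2 --v 5",
]

for prompt in prompts:
    status = "ok" if is_widescreen(prompt) else "check --ar"
    print(f"{status}: {prompt}")
```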

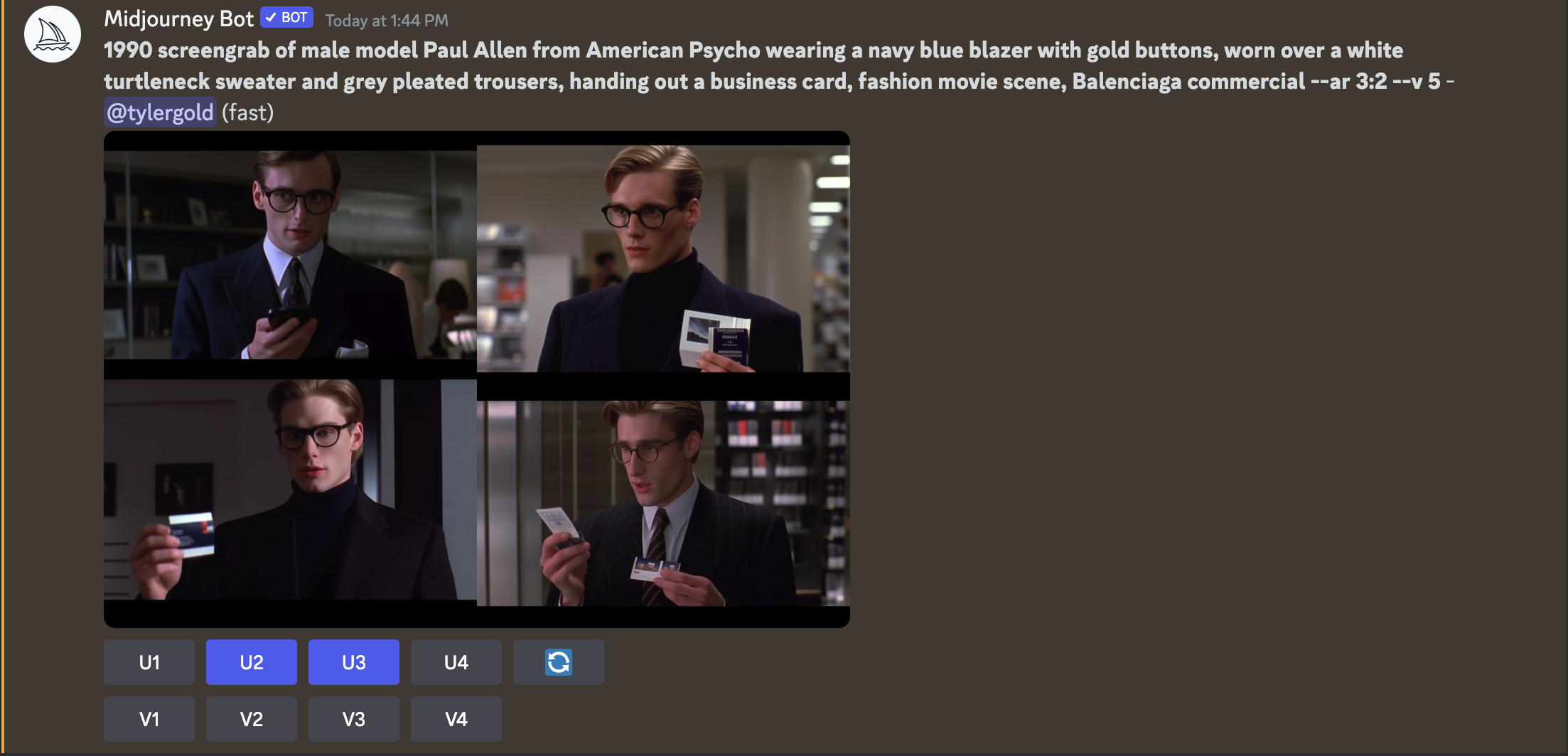

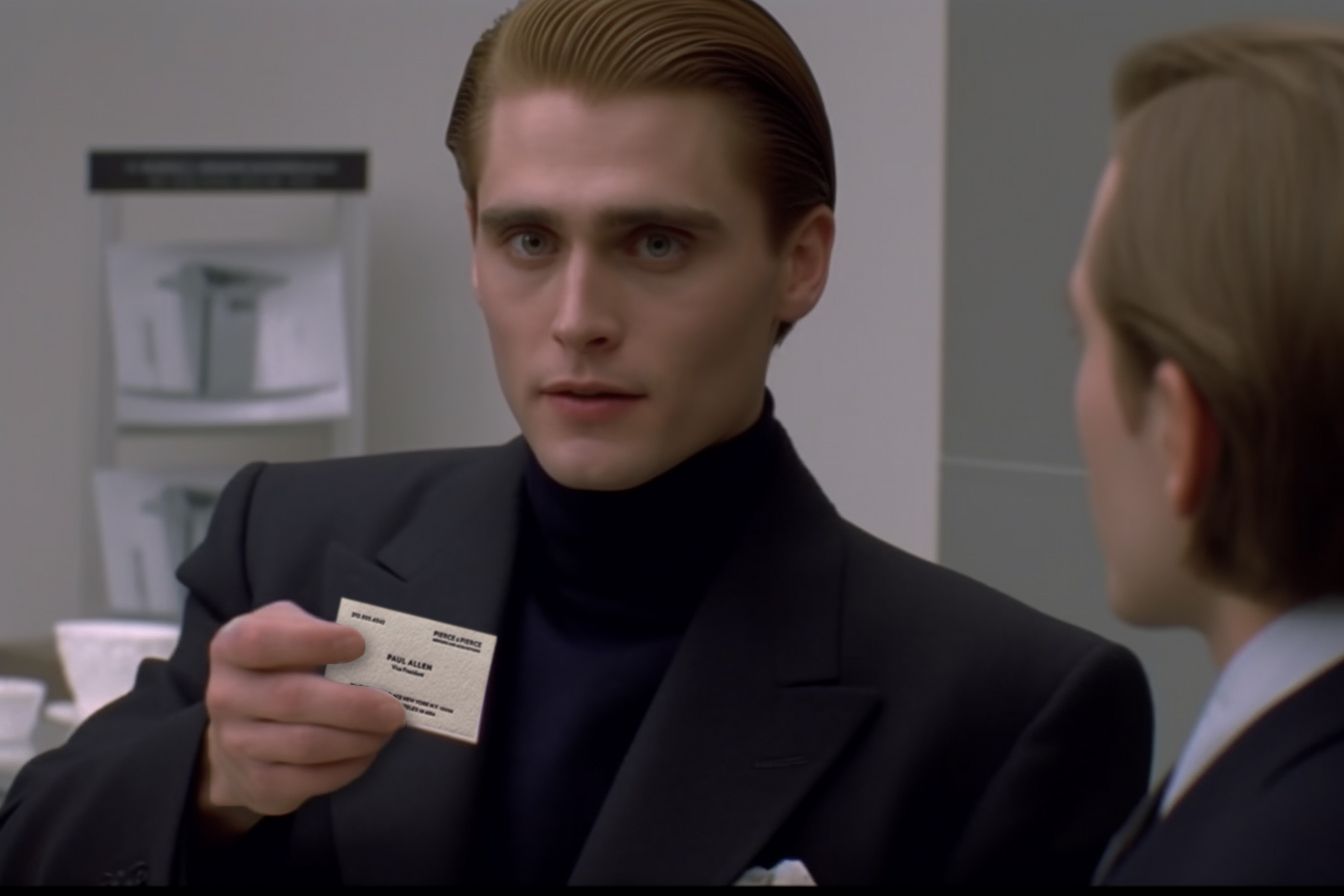

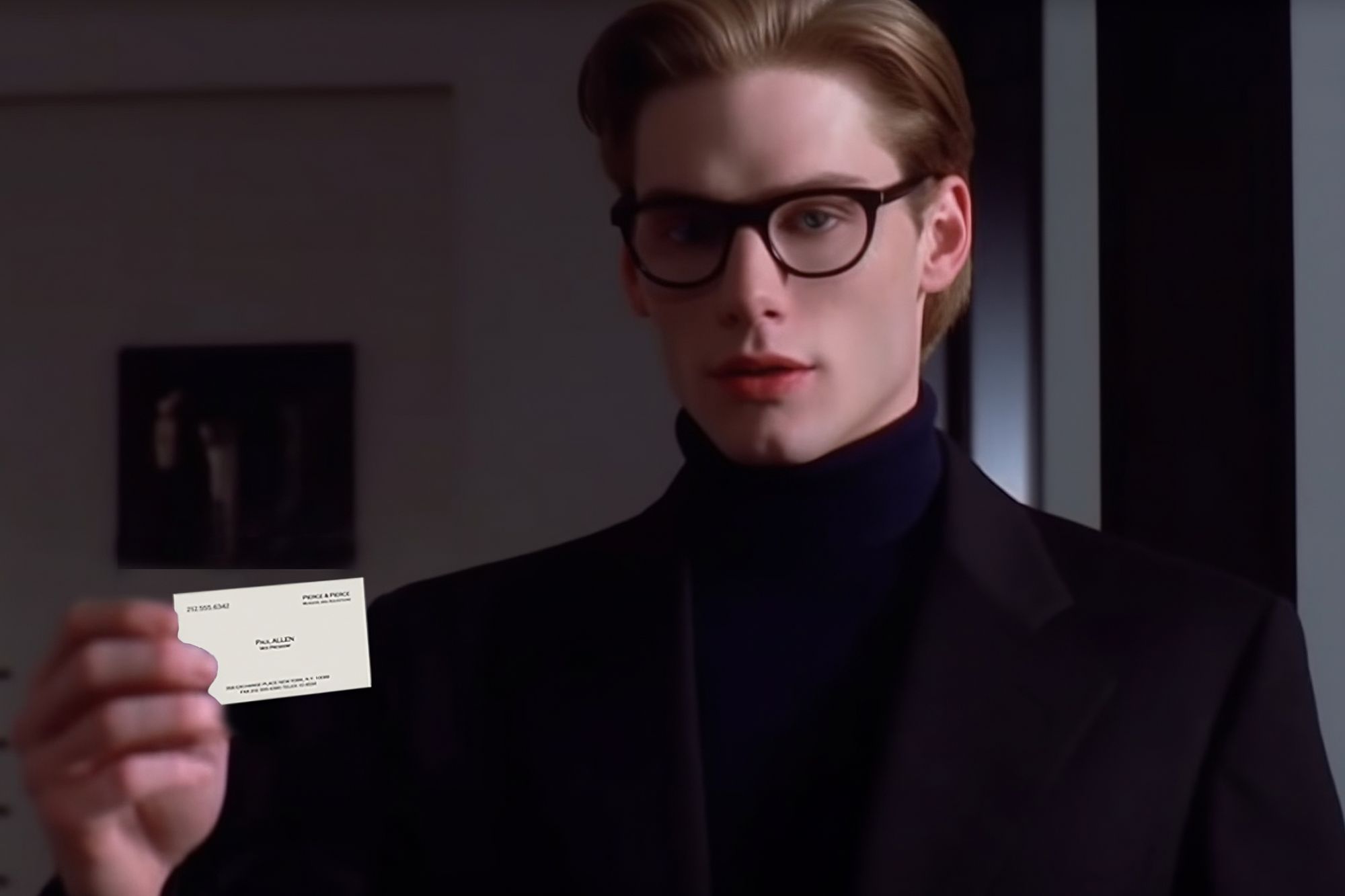

Let’s see Paul Allen’s card

The most important step was adding a little bit of a human touch: for the images of Paul Allen — who famously has the best business card of any Vice President at Pierce and Pierce — I tweaked the prompts from ChatGPT to make sure that Paul was holding his notorious business card.

The famous business card scene.

Midjourney gave me some very solid results, all from a single prompt. Only the fourth variation missed the mark. I didn’t bother upscaling it.

Then I found an image of Paul Allen’s card on the internet and went to Photoshop to take things to the next level. A few minutes of editing and we have something special.

The images of Willem Dafoe are my favorite, and fittingly for the most human character in the movie, these feel the least Balenciaga. Impressively, ChatGPT completely nailed the vibe I was looking for without me even having to make edits to its prompt. Midjourney took it from there.

Here’s a gallery of some of the images that were cool, but didn’t make it into the final video for various reasons.

Now that I had all the final images, it was time to write these characters some dialog and synthesize their voices.

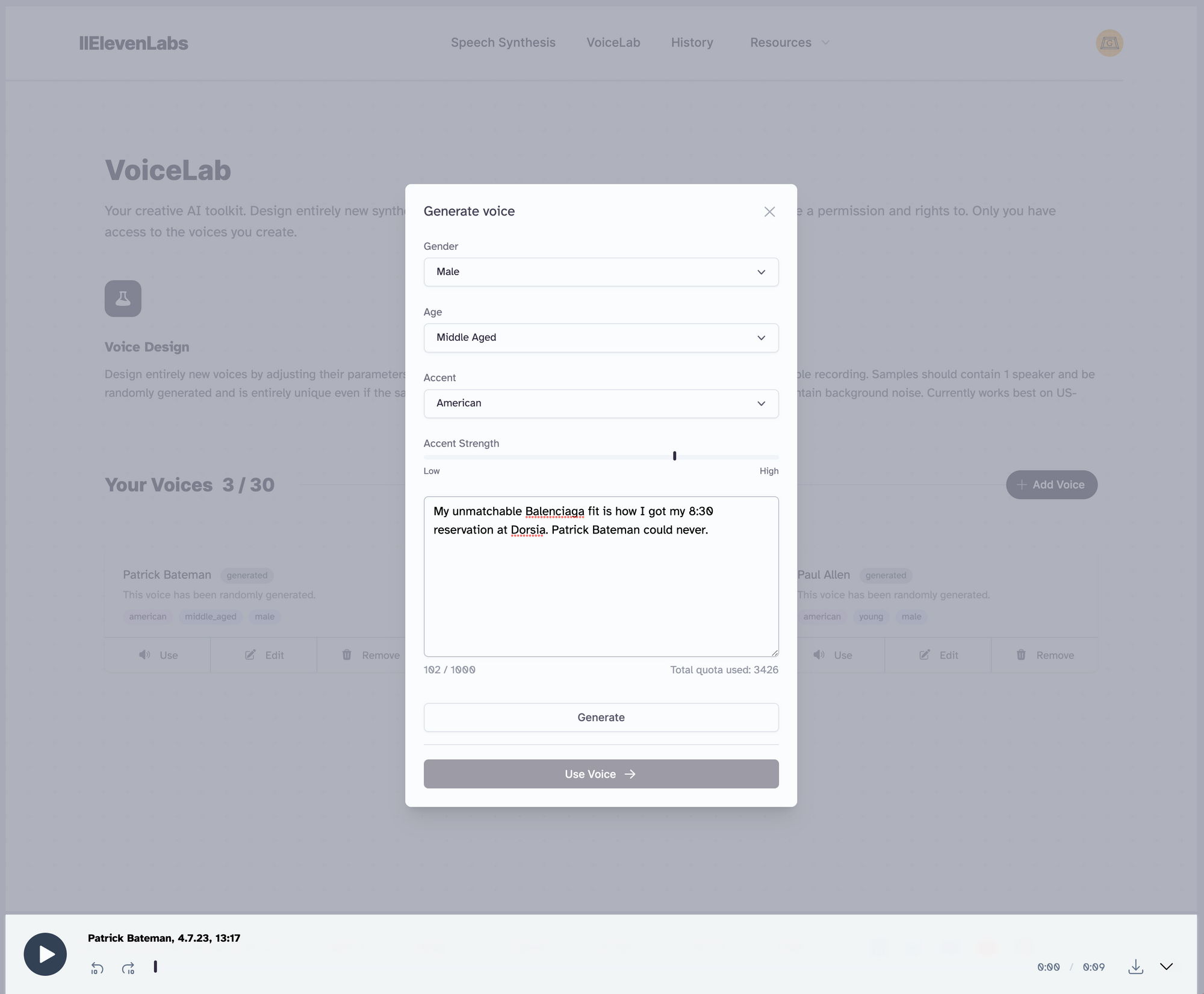

Synthesizing voices with Eleven Labs

I’ve been using Eleven Labs for quite a while to synthesize voice narration for articles I write here on Gold’s Guide. The voices that their tool is able to generate are incredibly convincing.

Eleven Labs has an option for cloning a voice based on a sample, but I didn’t want to clone Christian Bale, Jared Leto, or Willem Dafoe’s real voices due to concerns about copyright, so I used Eleven Labs’ custom voice option to create some voices that are close enough. It took me a couple minutes to fine-tune the voices, and then I had some fun writing the dialog you hear in the full video. Eleven Labs even generated the creepy laughter from the maître d’ after AI Patrick tries to get a reservation at Dorsia.

You can generate custom voices in various styles by playing with the gender, age, accent, and accent strength settings. Each time you click “generate” you will use some of your limited quota, and you’ll also get a completely new, random voice. Once you find one you like, hit “use voice” and it’ll add that personality to a library so you can re-use it whenever you want.

Have fun with this part. It’s easy to use ChatGPT to write the dialog, but in my opinion that takes away from the fun of it. This is where your creativity can shine, not the AI’s.

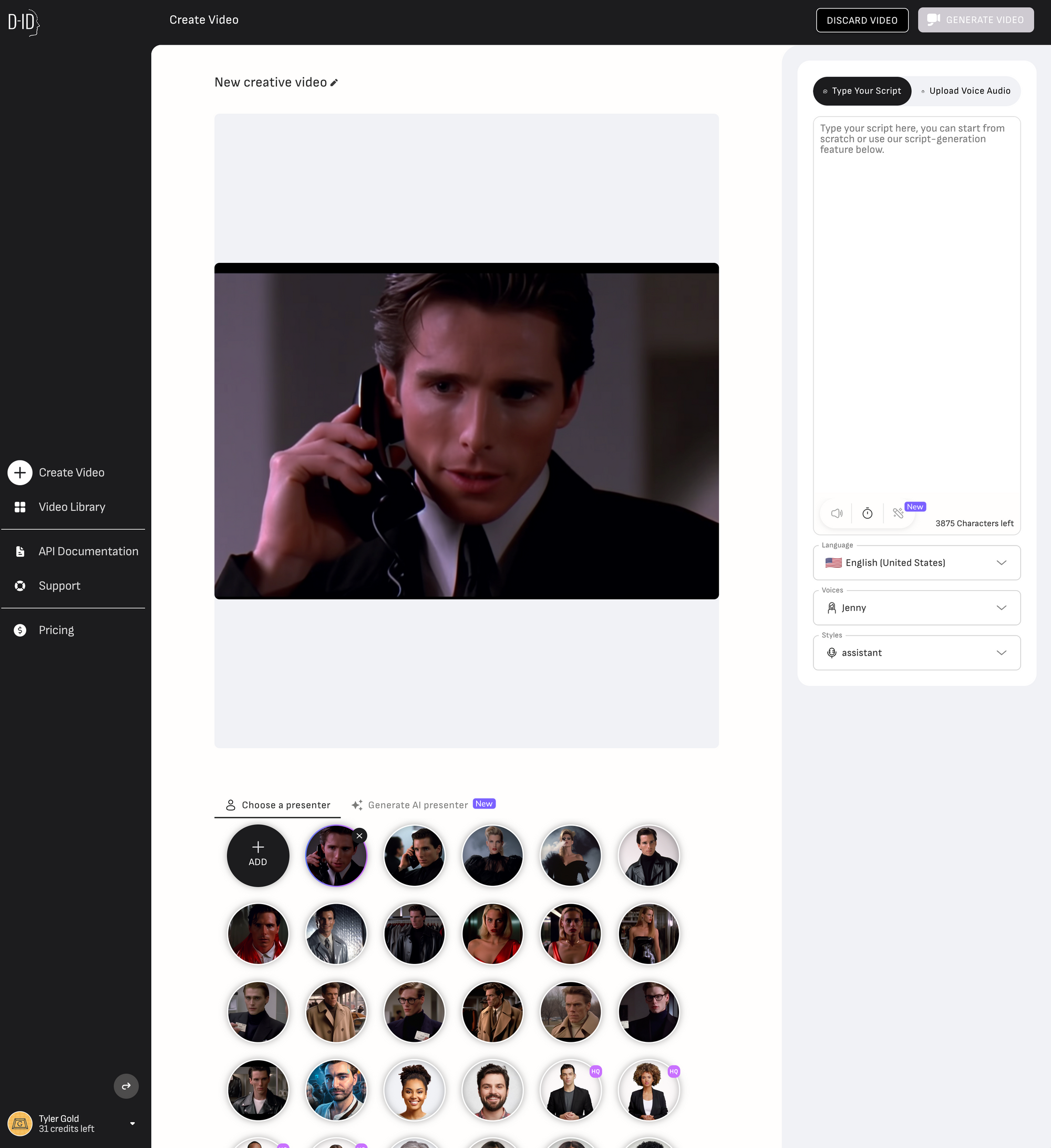

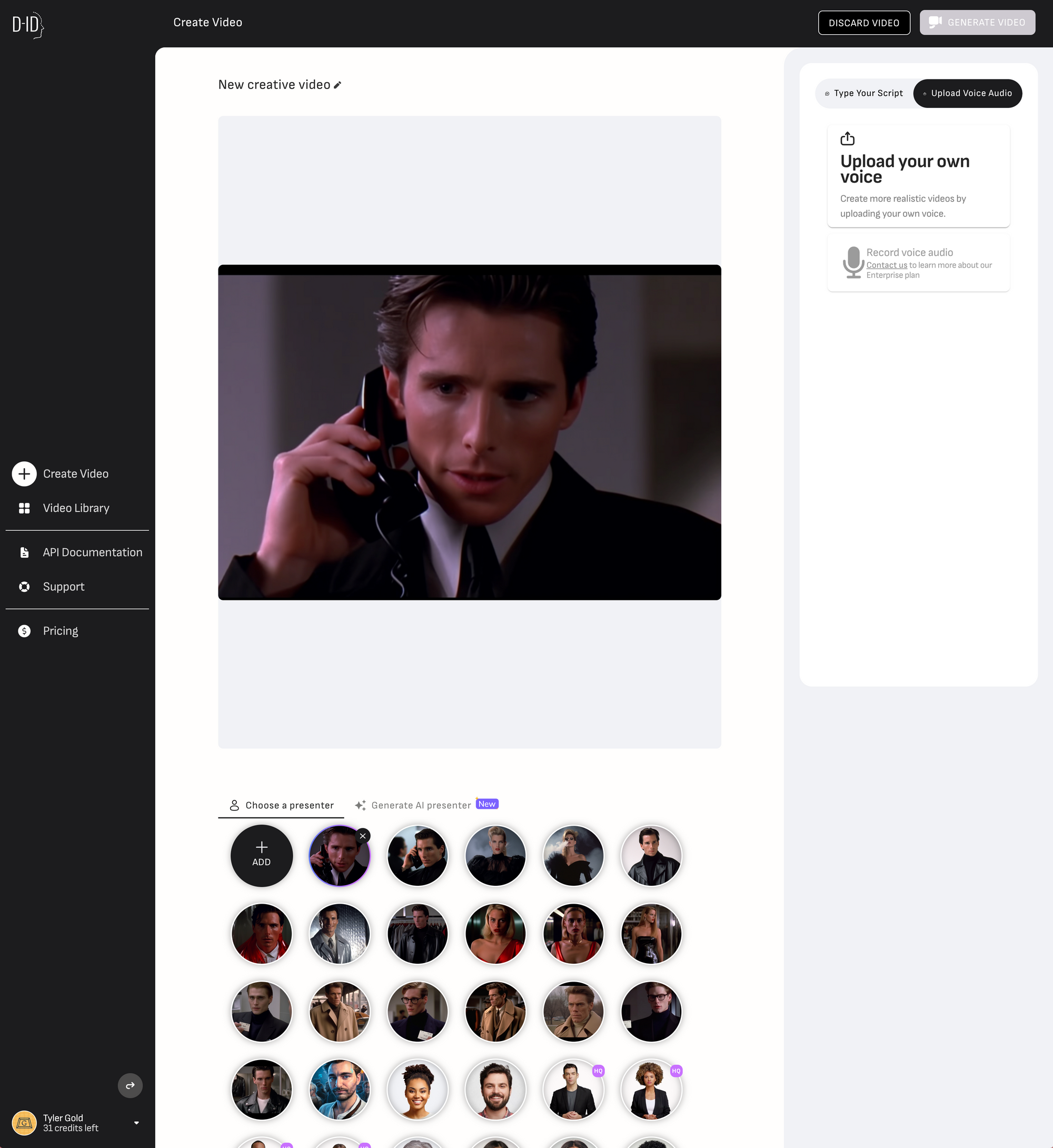

Animating and lip syncing everything with D-ID

This is the part that’s the most new to me, but interestingly it was also the simplest. D-ID provides 15 free animations to start, and you can either use one of their pre-made avatars or upload any image of your choice. We’re going to upload the images we just made in Midjourney.

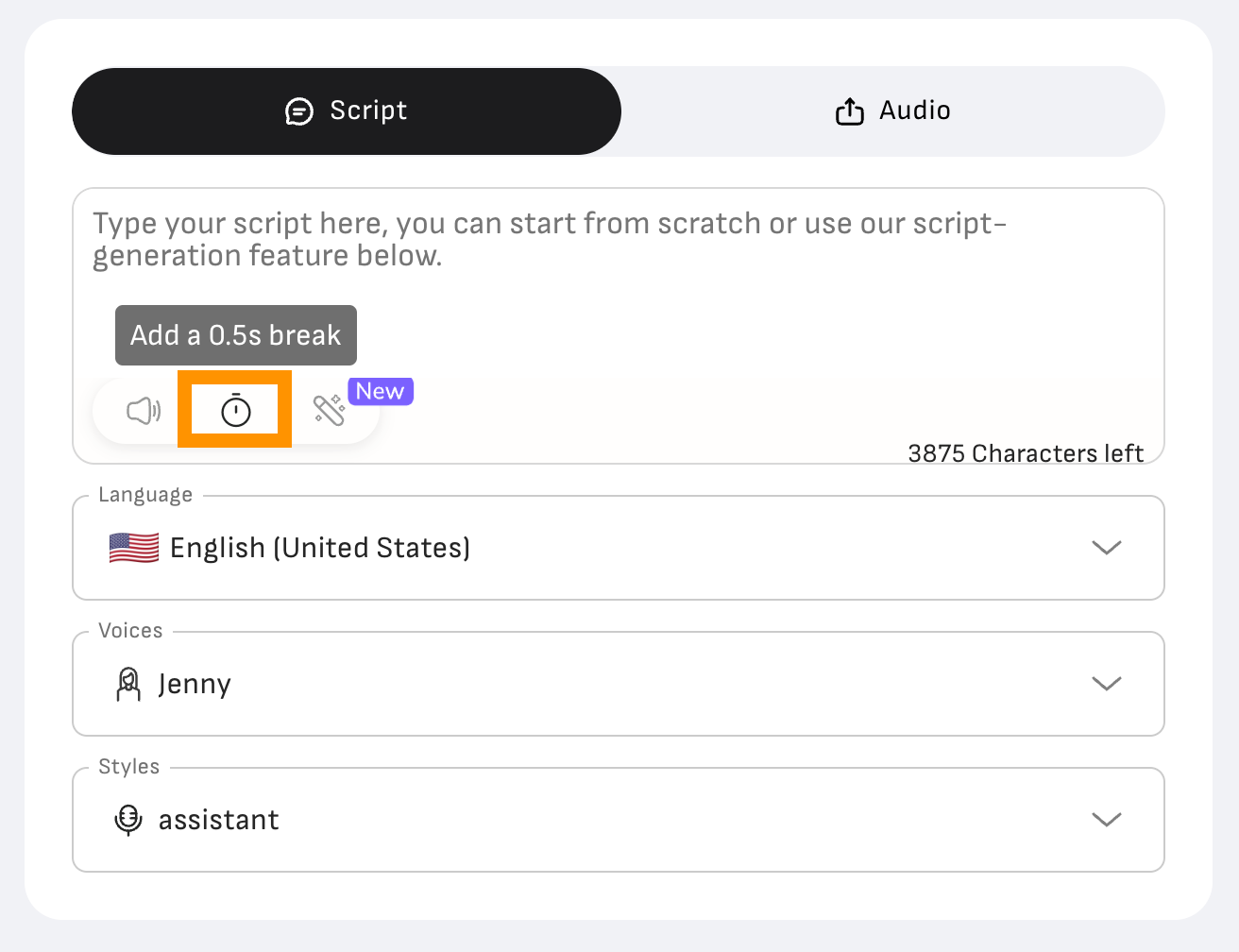

The interface is very simple. Click to the right to “upload your own voice” and then upload the files we generated with Eleven Labs.

Just upload your image as a custom avatar. Then you can either have D-ID generate a voice for you (Eleven Labs does it better, which is why we used it before) or upload any voice you want, including the ones we already generated with Eleven Labs.

The result is equally stunning and uncanny.

But if you watch closely, it’s a little rough around the edges.

D-ID charges something like $50 / month to get rid of their watermark. They know it’s worth it. I signed up for the entry-level plan so I could create enough animations.

There’s a simple library interface to download all your previously generated videos.

Pro-tip: to animate characters without any dialog, use the little clock button to add a half-second pause.

You can just add like, ten or twenty of these little pauses and D-ID will generate a video where the character isn’t talking that lasts for a few seconds.
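The pause math is simple, but here it is as a quick sketch in case you want a specific shot length. It assumes each pause adds exactly half a second and that D-ID adds no extra padding of its own, which I haven’t verified; the function names are mine.

```python
import math

PAUSE_SECONDS = 0.5  # length of one pause, per the tip above


def silent_duration(pause_count):
    """Seconds of silent animation produced by a given number of pauses."""
    return pause_count * PAUSE_SECONDS


def pauses_needed(target_seconds):
    """Smallest number of pauses that covers the target shot length."""
    return math.ceil(target_seconds / PAUSE_SECONDS)


print(silent_duration(10))  # ten pauses
print(silent_duration(20))  # twenty pauses
print(pauses_needed(3))     # pauses for a three-second shot
```

Under those assumptions, ten to twenty pauses works out to between five and ten seconds of a character just blinking and looking around.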

Finding music

All of the Harry Potter videos used the same song, and I wanted to use the same one since it’s kind of become the theme song for these memes. It’s called “Lightvessel” by an artist called Thip Trong, available as part of the library that Epidemic Sound licenses as part of their subscription program for music. It’s also available to purchase individually; music licensing costs are often surprising to the uninitiated.

You can, of course, use any music you are inclined to use, within reason and so long as it’s licensed properly. I always make sure I have the rights to use whatever song I end up choosing. Once that was set, I downloaded the track and opened up iMovie.

Editing it all together

This was probably the most fun part. It didn’t even take that long.

The timing is a key part of making this whole thing make sense. You have to cut at the right moment. The song serves as the base layer for the entire video, and helps inform the timing for how long each shot appears on screen and for cutting between scenes.

I created a flash / camera shutter effect (CapCut will do this for you) and added them throughout the video where I felt they were additive. If you want to learn more about editing, pacing, and storytelling, especially for the internet, The Editing Podcast is a great resource.

I spent a little less than an hour editing the whole thing together on a quiet Saturday morning — going through about three iterations before landing on the version you can watch now that you know the full context.

AI is already automating this: Wingardium Balenciaga, “a totally automated fashion show that keeps on going, forever,” infinitely recreates new variations of this on its own. What’s next?