If ChatGPT being released wasn’t enough mind-blowing AI progress for you, fall 2022 also saw the image-editing app Lensa release a new AI selfie generator — which temporarily propelled it to the #1 spot in the US App Store.

Obviously I had to try it.

What exactly is happening here?

Lensa is an app for retouching and editing images. It’s mostly used for cutting people out of backgrounds so you can apply more fun settings to your photos, but it also has some impressive retouching and editing tools. Basically, it simplifies a lot of the work pro editors might use something like Photoshop for. I like technology that simplifies complicated systems.

I hadn’t heard of Lensa until this new feature was released, but I do remember its sibling Prisma, another cool app which transforms photos to look like paintings.

The process

After downloading the Lensa app, I followed the quick instructions to set things up and then I uploaded a dozen pictures of me. Most of them were selfies but a few were photos other people have taken of me.

The app specifically mentioned that there should be no other subjects in the photos, so I made sure to only upload images where I was alone.

I paid for the “most popular” choice which generated 100 avatars and cost me $11.99.

It took a couple hours to get my avatars. I just went about my day and got a notification later that evening. Very simple process.

The results

Hard to deny how impressive these are.

For reference, here is a real photo of me:

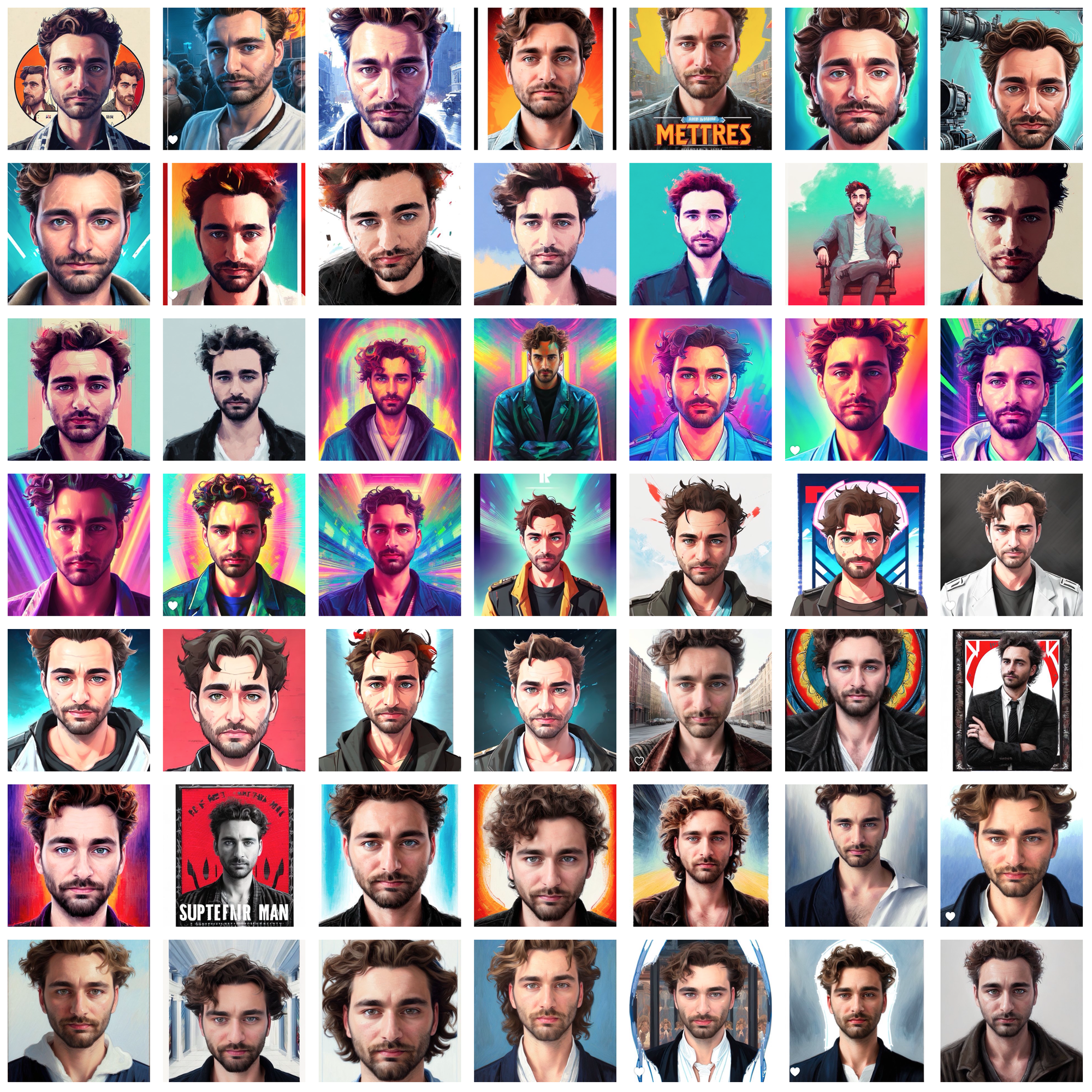

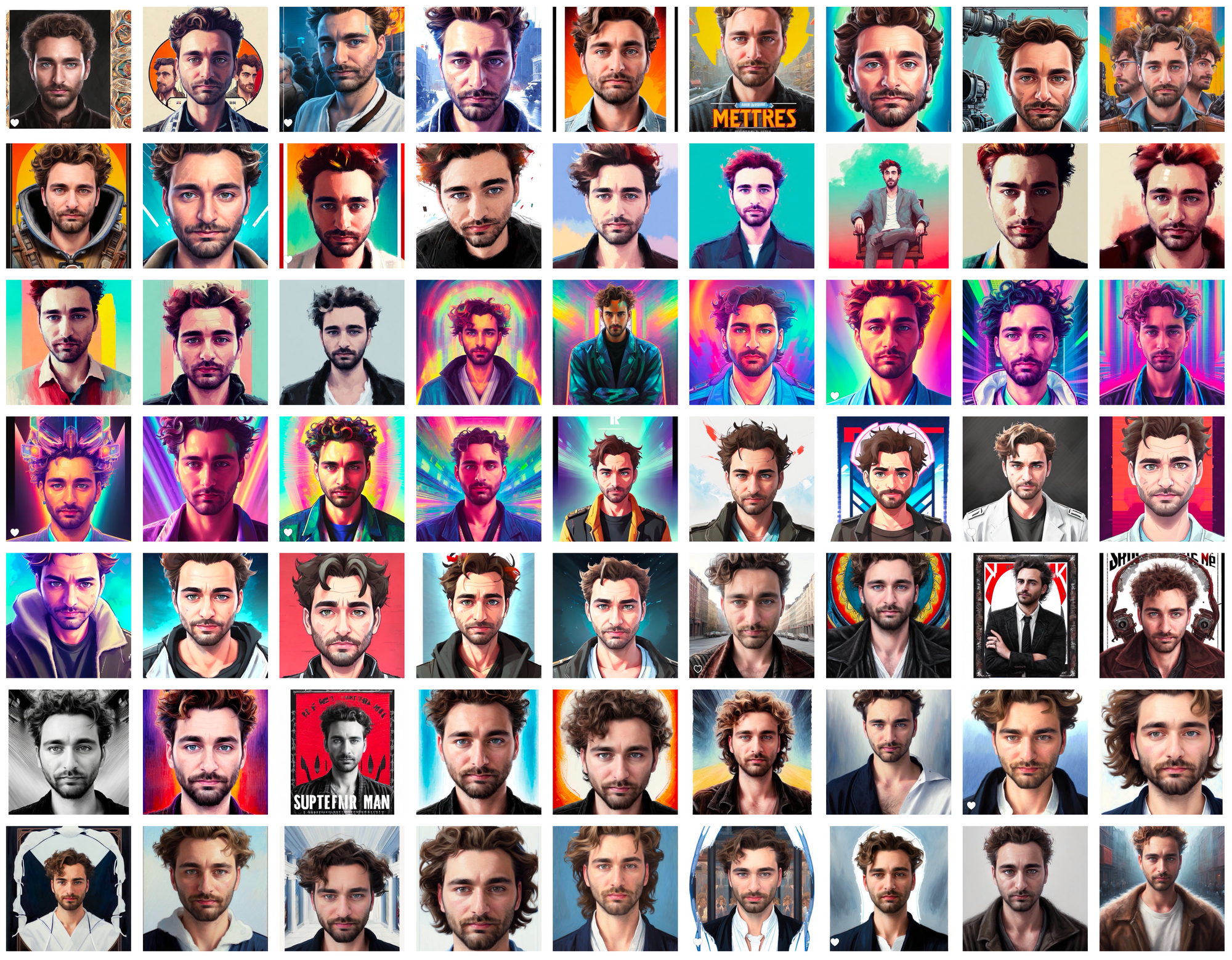

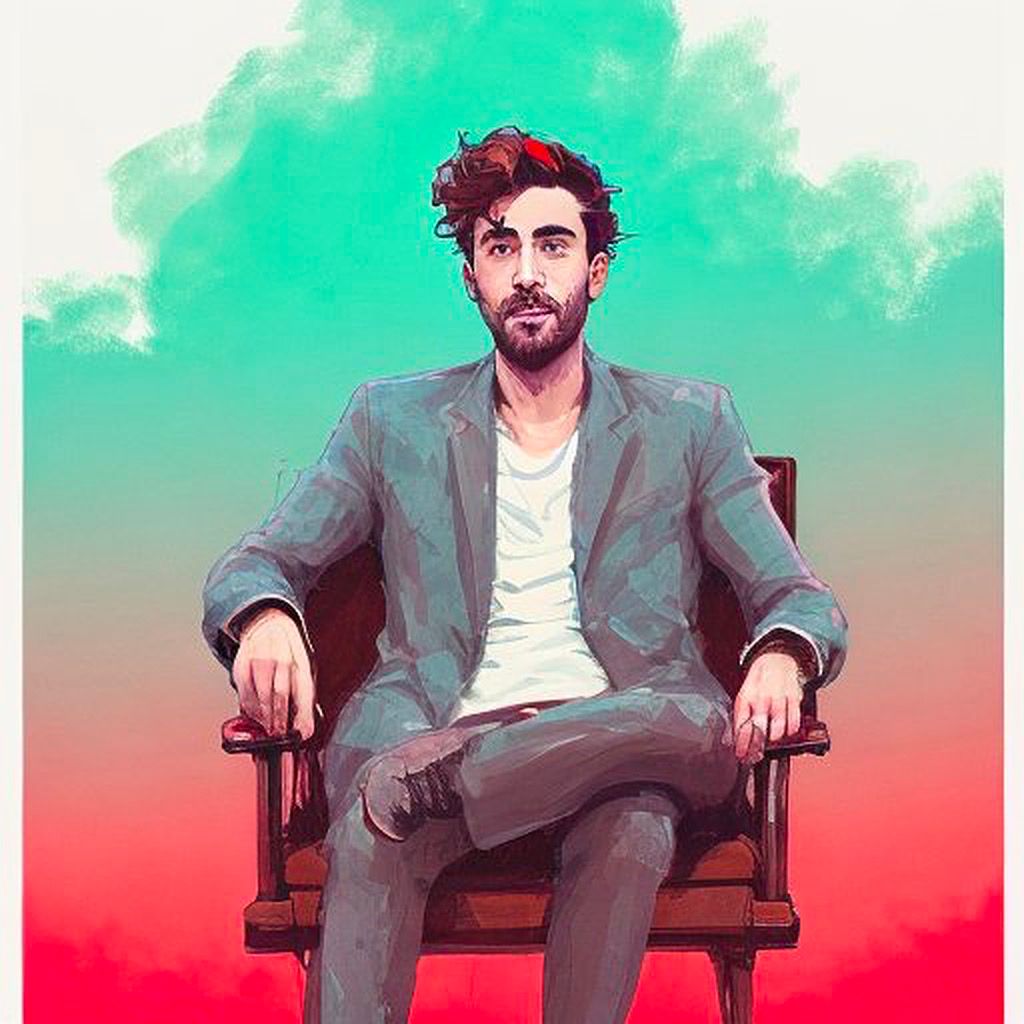

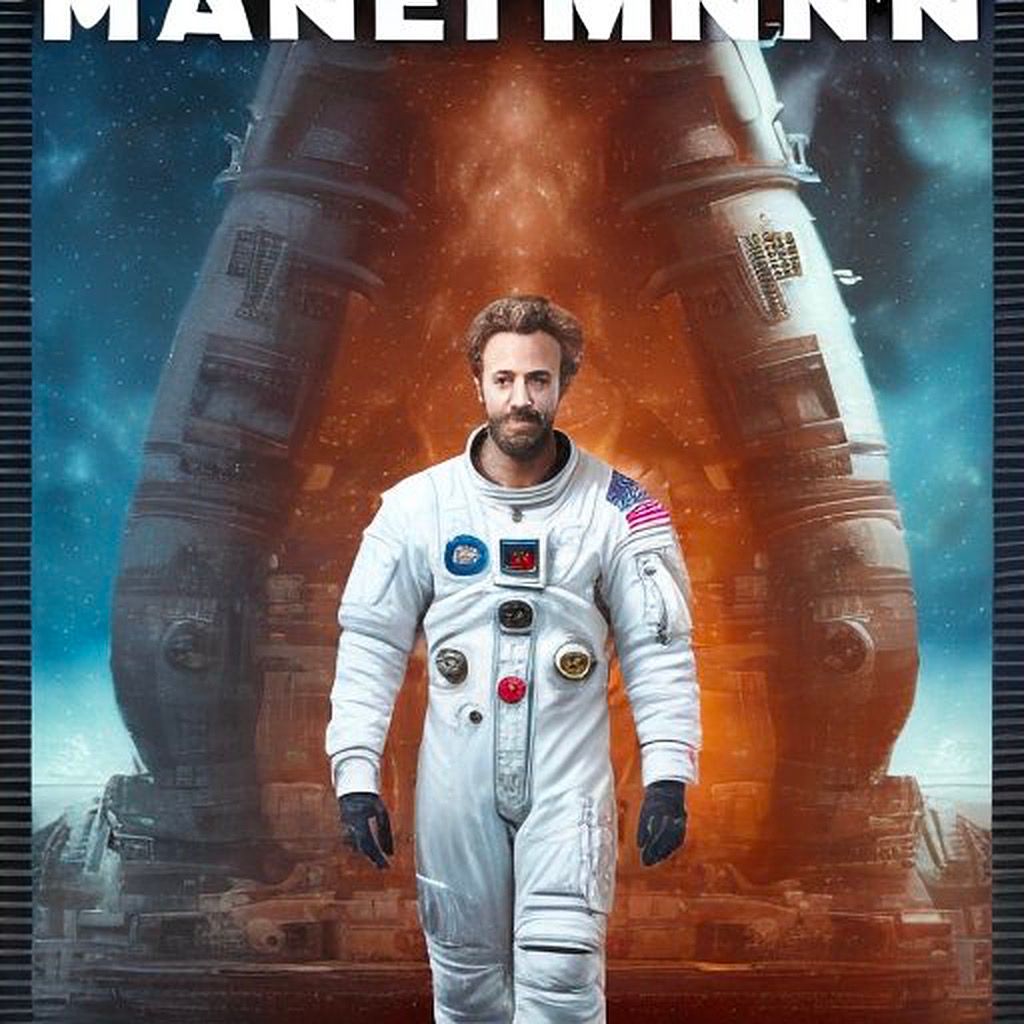

And here are some of the more impressive avatars Lensa generated:

I’m genuinely impressed. Yes, some minor details are off: my eyes came out a bit more blue/gray than they actually are.

But if an artist sent me any one of the above portraits I would be excited about it. Lensa generated 100 of them for me.

My experience using Lensa was overwhelmingly positive.

It does get weird

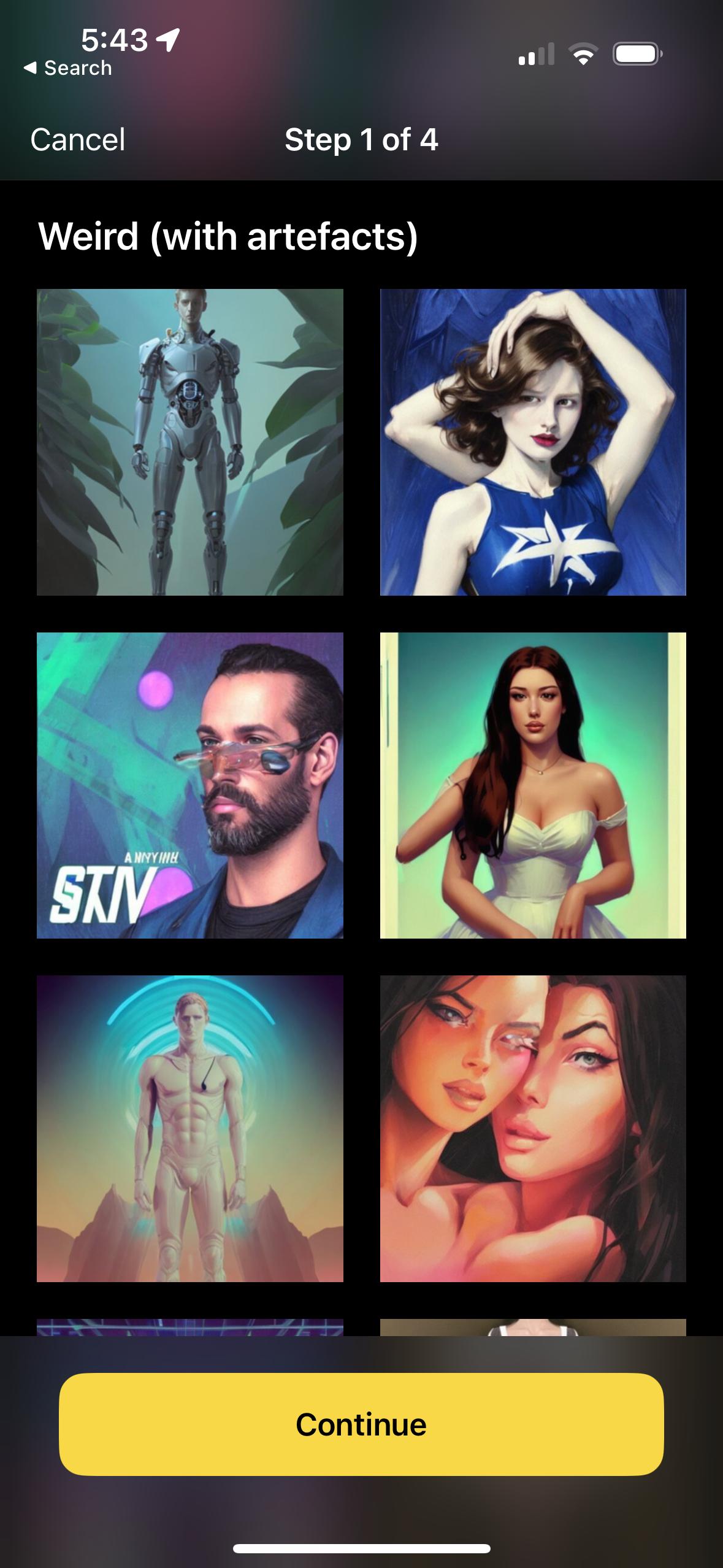

But that’s not to say that some of the results weren’t utterly bizarre. Because they were. I had a few that clearly don’t look like me and a couple of others that are just... wrong.

The multiple-personality movie poster in the bottom right is the weirdest one, but it’s also pretty funny that it gave me a third leg in the top left.

Lensa says these have “artefacts”, which is the British spelling of what I would call “artifacts”. Really weird.

It’s interesting that even though these models are machines, their output is difficult to predict. When I think about machines, I think about predictable outcomes. I think about systems. I think about rules and binary code and calculators and arithmetic. 1+1=2.

There is a certain level of “hallucination” that these models all require in order to generate content. Sometimes Lensa will give you two heads or mess your eyes up. Sometimes ChatGPT will confidently write 800 words and be totally wrong about the main thesis of its essay.

Clearly the photos you upload affect the results, but the model itself has its own, different kind of influence. These machine learning models are biased by the datasets they are trained on.

That’s why it was disappointing, if not entirely unsurprising, to see people criticizing Lensa for hyper-sexualizing their avatars and for inserting “mangled” signatures in the corner of some generations.

I’m cropping these for privacy reasons/because I’m not trying to call out any one individual. These are all Lensa portraits where the mangled remains of an artist’s signature is still visible. That’s the remains of the signature of one of the multiple artists it stole from.

A 🧵 https://t.co/0lS4WHmQfW pic.twitter.com/7GfDXZ22s1

— Lauryn Ipsum (@LaurynIpsum) December 6, 2022

Polygon has a good explanation of how this happened:

Lensa uses Stable Diffusion, an open-source AI deep learning model, which draws from a database of art scraped from the internet. This database is called LAION-5B, and it includes 5.85 billion image-text pairs, filtered by a neural network called CLIP (which is also open-source). Stable Diffusion was released to the public on Aug. 22, and Lensa is far from the only app using its text-to-image capabilities. Canva, for example, recently launched a feature using the open-source AI.

An independent analysis of 12 million images from the data set — a small percentage, even though it sounds massive — traced images’ origins to platforms like Blogspot, Flickr, DeviantArt, Wikimedia, and Pinterest, the last of which is the source of roughly half of the collection.

More concerningly, this “large-scale dataset is uncurated,” says the disclaimer section of the LAION-5B FAQ blog page. Or, in regular words, this AI has been trained on a firehose of pure, unadulterated internet images.

Brutal, even if this makes the results less surprising. This is the internet we’re talking about, after all.

A major challenge for future AI models will be curating the datasets they are trained on. One can imagine a future “purity” score for models trained on datasets that have been reviewed by humans (or maybe even AI?) for accuracy and relevance in a certain field.

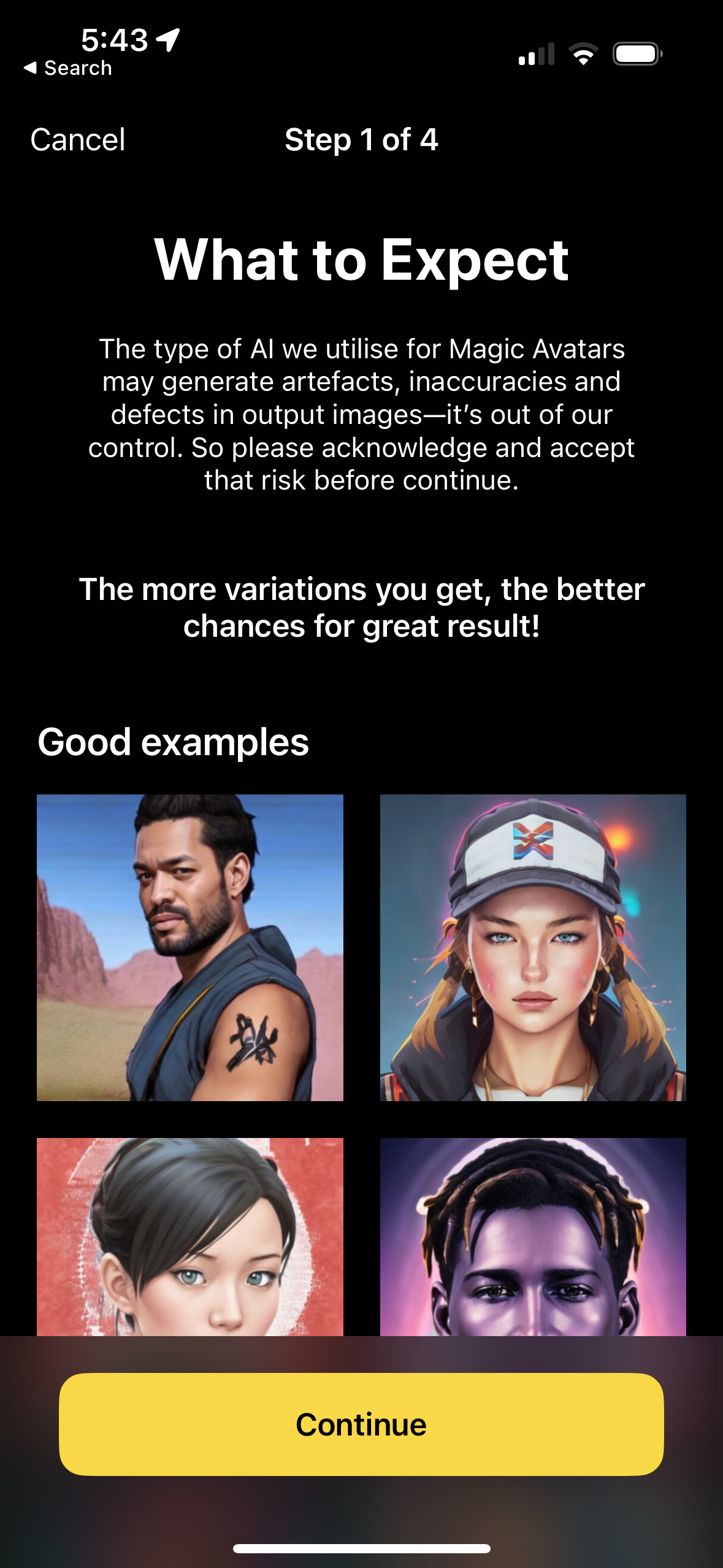

Seems like they picked some relatively inoffensive examples for “Weird”.

The “What to Expect” screen above, which Lensa shows you before you upload images, could benefit from more specificity.

This app is the first interaction with AI that many people are going to have. Considering that, there is a certain responsibility that Prisma Labs, the company building Lensa, has resting on its shoulders.

Should Lensa disclose that its model is trained on an open-source, uncurated dataset that has never been fully reviewed? Is the output biased, since it reproduces stereotypes? Or is it unbiased, since it shows the AI’s raw perspective on data that humans uploaded to the internet?

The privacy issues

I haven’t posted these Lensa portraits of me on any social network (just here). But I have shown a few friends... and they all said the same thing.

“Now they own your face.”

The irony! My face has been uploaded to Twitter and Instagram and Facebook and who knows where else. No one ever reads the privacy policy until it’s too late. Most importantly, Prisma isn’t incentivized to store my data. It’s literally too expensive.

They’re a small company. I’m far more concerned about a company like Oracle collecting this kind of data — since they actually have the resources to leverage it all.

As soon as the avatars are generated, the user's photos and the associated model are erased permanently from our servers. And the process would start over again for the next request.

— Prisma Labs (@PrismaAI) December 6, 2022

Prisma’s whole Twitter thread is worth reading. I find their response to be perfectly satisfactory, even if I disagree with their backtracking conclusion that compares AI to accounting software. That ain’t it.

I’m not sure if I’ll ever post these Lensa portraits to socials, let alone use one as a profile photo. I wish other people hadn’t had bad experiences, and I think the app needs to do a better job of warning users about what might go wrong. But I’m glad that I tried it. I got good results, it’s fun to look through them with family and friends, and I honestly got enough joy from using it that I might do it one more time.