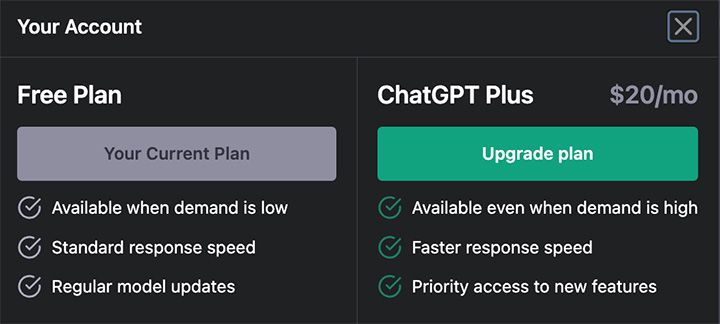

OpenAI released their much-rumored Plus version of ChatGPT on 9 February 2023.

For $20 per month, subscribers get guaranteed access to ChatGPT, even “when demand is high”. The Plus tier also includes early access to experimental features: right now, OpenAI is trialing a new Turbo version that, at least in early tests, can run over 11x faster than the default model.

> turbo is 11.6 times faster here pic.twitter.com/G6DS7f86fm
>
> Morten Just (@mortenjust), February 11, 2023

This aligns with my experience. Some people in the replies to that tweet noted that the Default model provided a longer answer, which would theoretically take longer to generate (more tokens = more compute = more time). I actually think this demonstrates a fundamental difference between these two models.

The Turbo mode seems both faster and more concise than the default version. These two things are independent of each other: Turbo isn’t faster because it’s more concise; it’s simply faster (and also, unrelatedly, happens to be less verbose).
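One way to separate raw speed from verbosity is to compare tokens generated per second rather than total response time. The sketch below is only an illustration: the token counts and timings are made-up numbers rather than measurements, and `tokens_per_second` is a hypothetical helper, not part of any OpenAI tooling.

```python
def tokens_per_second(token_count: int, seconds: float) -> float:
    """Throughput: generated tokens divided by wall-clock generation time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return token_count / seconds


# Hypothetical runs, invented purely for illustration (not real measurements).
default_run = {"tokens": 300, "seconds": 30.0}  # longer answer, slower
turbo_run = {"tokens": 150, "seconds": 1.5}     # shorter answer, faster

default_tps = tokens_per_second(default_run["tokens"], default_run["seconds"])
turbo_tps = tokens_per_second(turbo_run["tokens"], turbo_run["seconds"])

# Wall-clock ratio mixes two effects: fewer tokens AND faster generation.
wall_clock_ratio = default_run["seconds"] / turbo_run["seconds"]

# Throughput ratio removes the effect of answer length.
throughput_ratio = turbo_tps / default_tps

print(f"Wall-clock speedup: {wall_clock_ratio:.1f}x")
print(f"Per-token speedup:  {throughput_ratio:.1f}x")
```

With these invented numbers, the shorter answer explains only a factor of two; the rest of the gap comes from faster per-token generation. If Turbo were faster only because it wrote less, the per-token speedup would be close to 1x.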

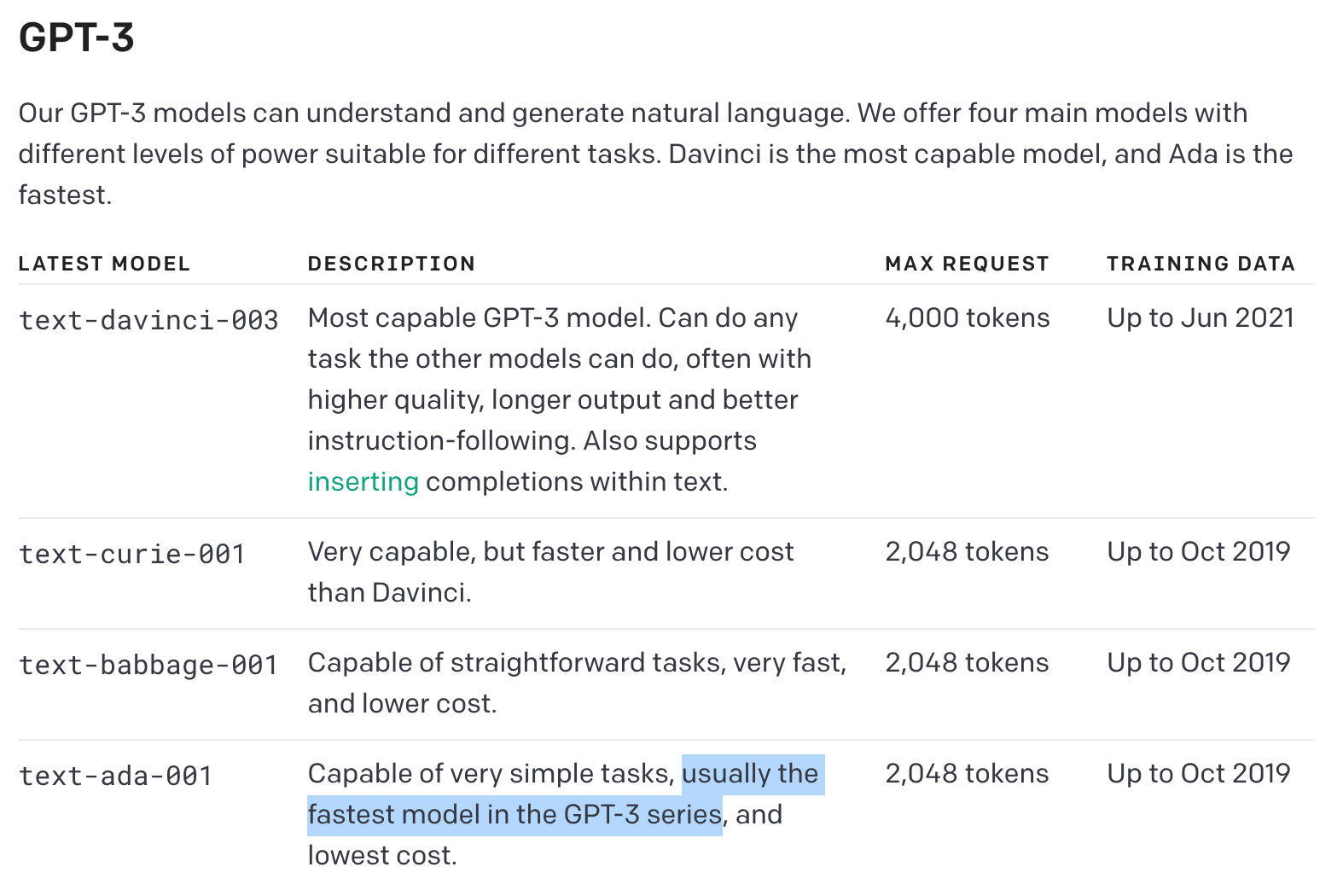

My guess is that this new Turbo mode is based on one of the smaller variants of GPT-3, probably Ada, which OpenAI’s documentation notes is their fastest (and least costly) model.

I think the Default mode is based on Davinci, which is probably why it’s more verbose.

Since I already have an OpenAI account with a credit card attached (I spend about $20 per month on DALL-E credits to generate the images in feature articles), getting set up for ChatGPT Plus was a breeze.

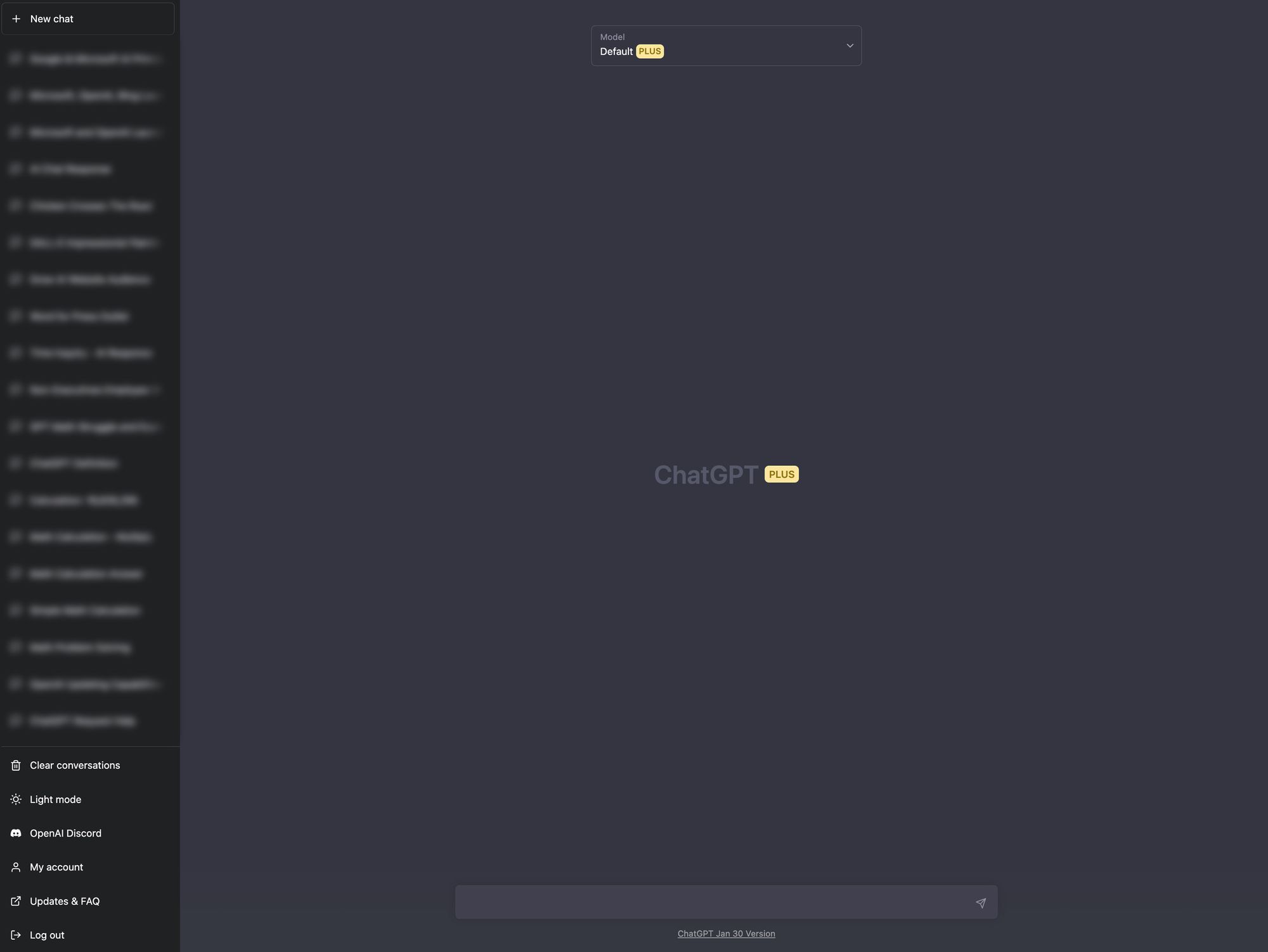

A little gold button appeared in the bottom left corner menu; I like the color choice.

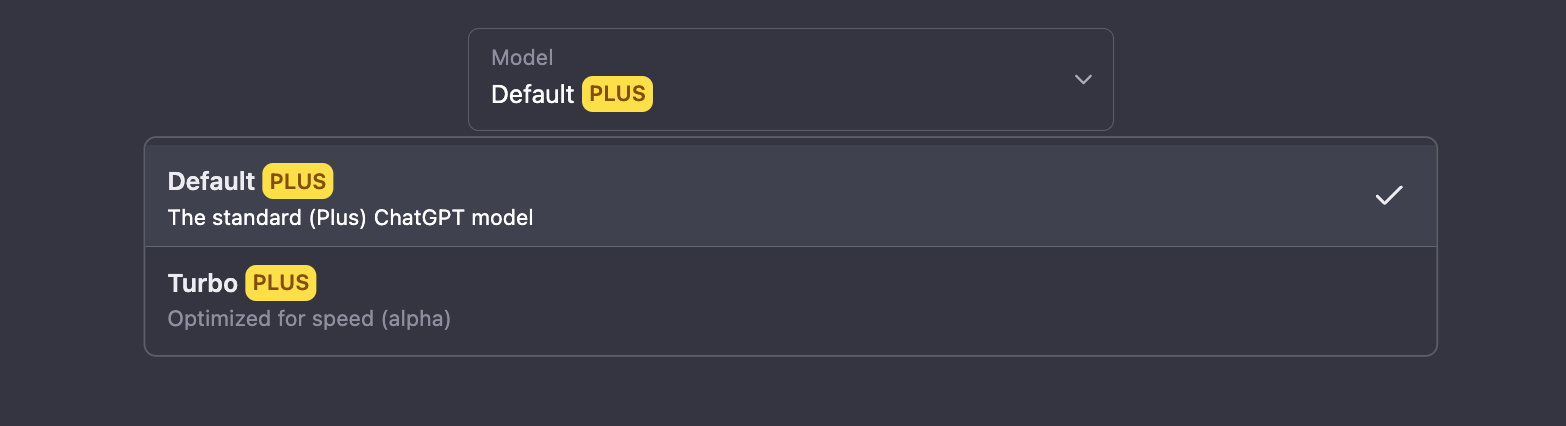

When you open a new chat, there’s a simple drop-down to choose which model you want to use. As of now, once you select the model you’re stuck with that version for that chat; you have to start a new chat if you want to switch models.

The UX is becoming increasingly minimal, at least for ChatGPT Plus.

Clean.