It’s news any time ChatGPT gets an update, even a small one like this week’s, which promises “improved factuality and mathematical capabilities.”

It’s tricky to measure factuality in a simple test, but I can confirm that while ChatGPT is a little bit better at math, it still struggles to multiply two four-digit numbers.

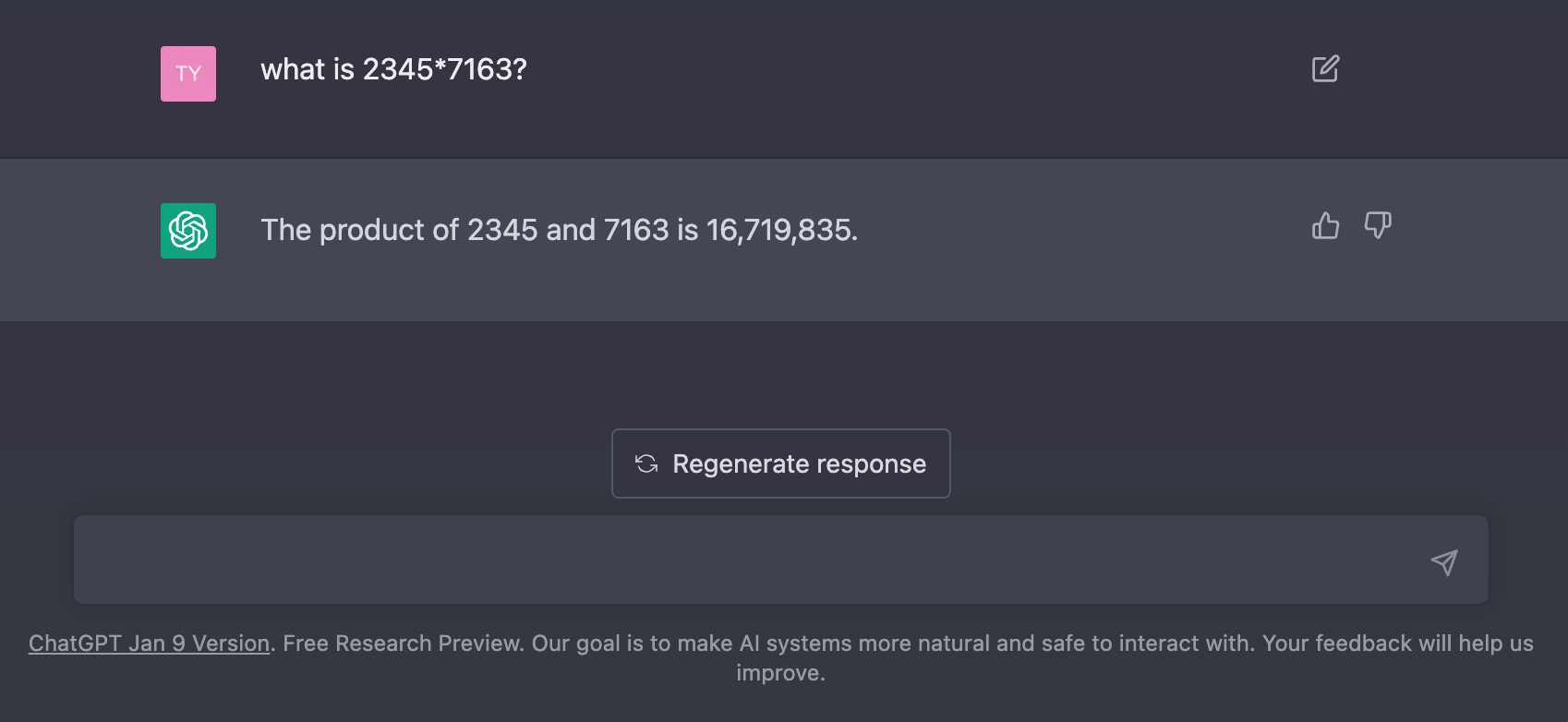

Two days ago (29 January), I happened to ask ChatGPT to multiply 2345 by 7163 to make a point in that evening’s edition of my Sunday AI newsletter. ChatGPT told me the answer was 16,719,835.

This is pretty far off: 77,400 off, to be precise. The correct answer is 16,797,235. ChatGPT got the first three digits and the last two right but scrambled the three in the middle (9, 7, 2 became 1, 9, 8), which is a rough mistake. That was the Jan 9 version.

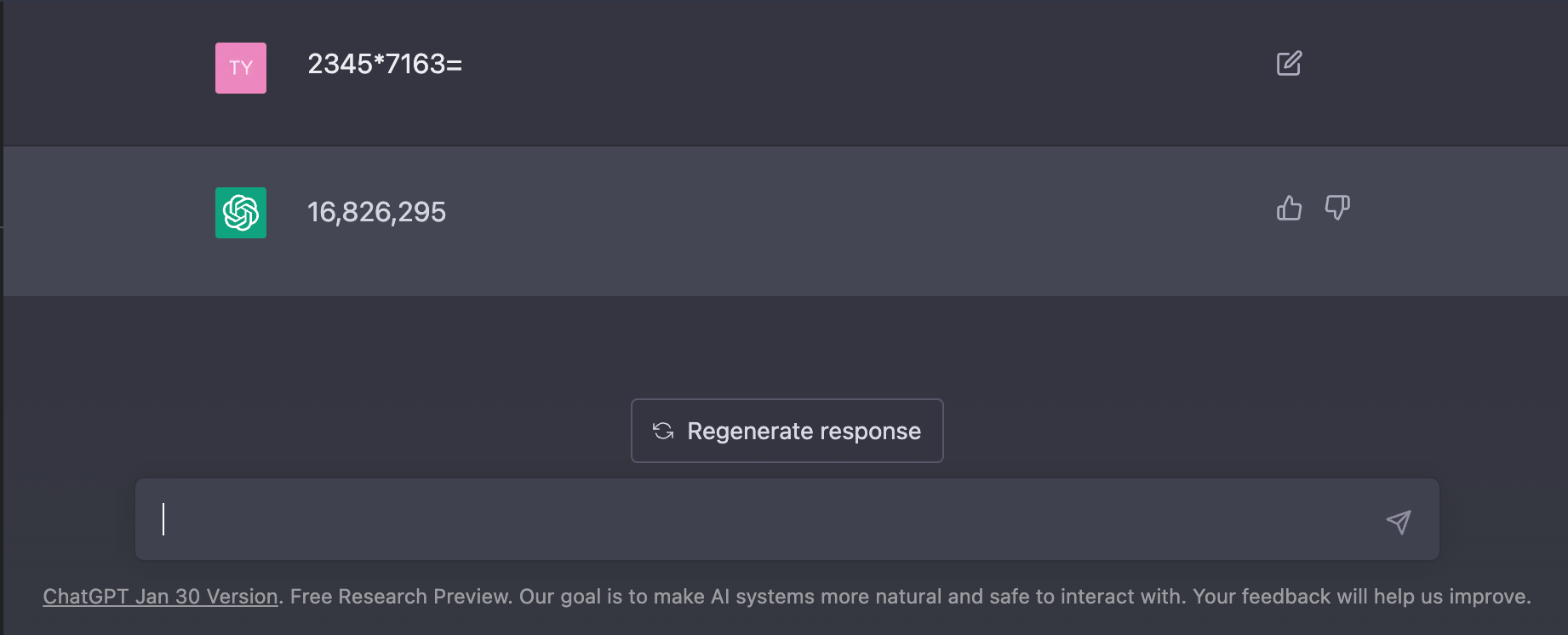

Then I asked it to do the same calculation again today and got 16,826,295, which is still wrong but a bit closer: it overshoots by 29,060. If we’re keeping things in the realm of sixth-grade math, the absolute error is smaller... but the answer is still very wrong.

This is because ChatGPT is not a calculator. Calculators are deterministic: they provide the same results from a given input every time.
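To make "deterministic" concrete, here is a minimal Python sketch. The `multiply` helper is just an illustrative name of mine; the point is that ordinary integer arithmetic returns the same value no matter how many times you run it.

```python
# Ordinary integer arithmetic is deterministic: same inputs, same output.
def multiply(a: int, b: int) -> int:
    return a * b

# Run the same calculation 1,000 times and collect the distinct results.
results = {multiply(2345, 7163) for _ in range(1000)}
print(results)  # {16797235}
```

A set with a single element means every one of the 1,000 runs agreed.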

Transformer AI models like ChatGPT are probabilistic. Each time you submit a prompt, the model runs a fresh prediction of the tokens that should come next. It’s a large language model that predicts which pieces of text make sense in each sequence.

It transforms text into tokens (basically, numbers, which computers are very good at processing; a token usually covers a chunk of characters rather than a single letter) and then calculates a very elaborate prediction of which tokens most likely follow the preceding ones based on all the information it has been trained on. It’s a highly tuned association machine. This is why ChatGPT dominates SAT math questions but struggles with this kind of four-digit multiplication.
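Here is a rough sketch of what "text to tokens" can look like. Everything in it is an assumption for illustration: `TOY_VOCAB` is a made-up vocabulary and `tokenize` is a simple greedy longest-match splitter, not ChatGPT's actual tokenizer, which uses a far larger vocabulary learned from data.

```python
# Made-up vocabulary for illustration only; token IDs are just list positions.
TOY_VOCAB = {tok: i for i, tok in enumerate(
    ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9",
     "23", "45", "71", "163", "234", " ", "x"]
)}

def tokenize(text: str) -> list[str]:
    """Greedily split text into the longest pieces found in TOY_VOCAB."""
    pieces = []
    max_len = max(len(tok) for tok in TOY_VOCAB)
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in TOY_VOCAB:
                pieces.append(piece)
                i += size
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return pieces

pieces = tokenize("2345 x 7163")
ids = [TOY_VOCAB[p] for p in pieces]
print(pieces)  # ['234', '5', ' ', 'x', ' ', '71', '6', '3']
print(ids)     # [14, 5, 15, 16, 15, 12, 6, 3]
```

Notice that neither number survives as a single unit: 2345 becomes "234" plus "5". A model working on pieces like these never sees the quantity 2345 as such, which is one intuition for why exact arithmetic is awkward for it.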

Prompt engineering FTW! The magic sentence is "let's think step by step using algebra" @_jasonwei pic.twitter.com/r2dZD3U4jQ

— floating point (@yar_vol) January 31, 2023

It’s Moravec’s paradox: hard problems are easy and easy problems are hard.

This YouTube video where Andrej Karpathy builds a GPT model from scratch is a great way to understand what this looks like. The intro is a solid primer on foundational concepts, but if you’re in a hurry I suggest you watch starting at 3:52 — it gets really interesting by 5:02. Andrej quickly moves into the more technical stuff after that.

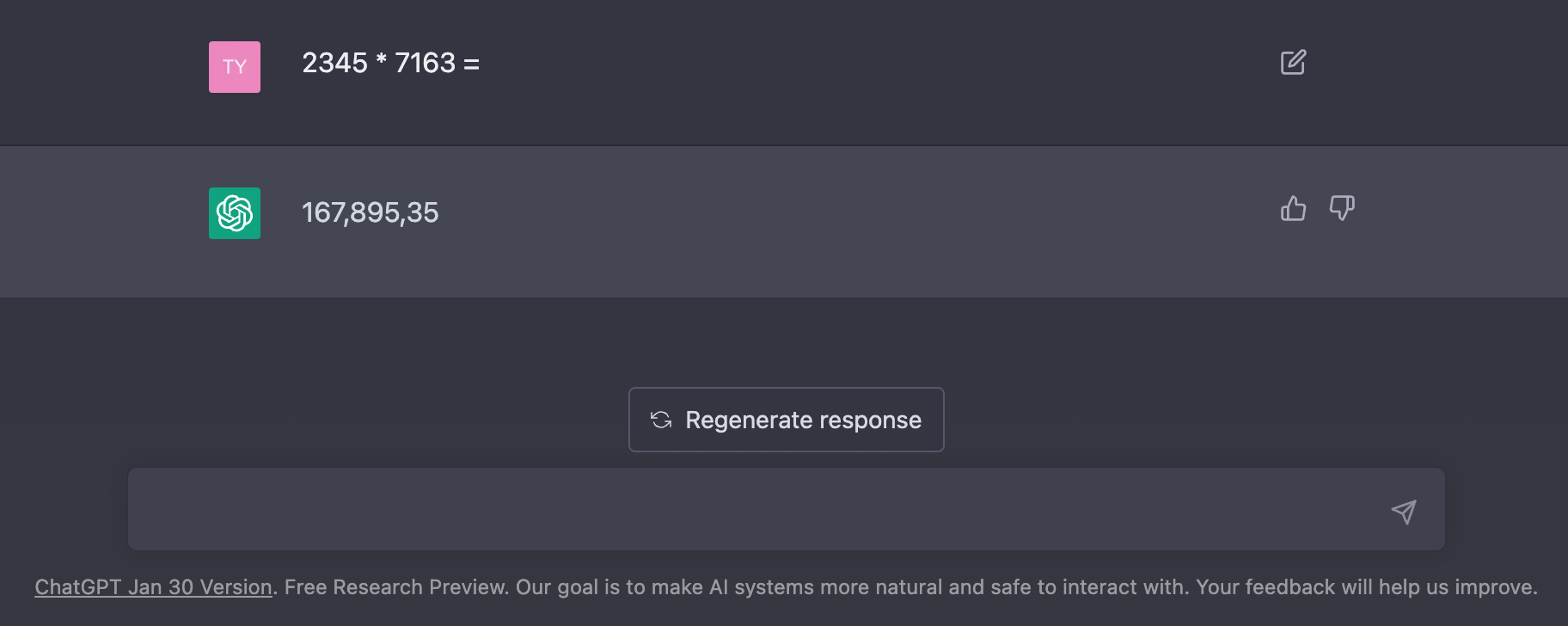

This probabilistic nature means transformer models like ChatGPT often provide different results given the same prompt, especially in separate chat instances. Each time you provide a math question, the model breaks your prompt into tokens, then uses the patterns it learned from the math problems in its training data to weigh which tokens are likely to come next. It’s literally translating the numbers into... numbers. Then translating them back again? These results feel random because they are.
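A toy version of that randomness, as a sketch only: the probabilities in `NEXT_TOKEN_PROBS` are invented, and a real model computes a fresh distribution over its whole vocabulary at every step. The mechanism is the same in spirit, though: the next token is drawn from a weighted distribution, so repeated runs can disagree.

```python
import random

# Invented probabilities for the next chunk of digits after "16,7".
NEXT_TOKEN_PROBS = {"97": 0.40, "19": 0.25, "89": 0.20, "26": 0.15}

def sample_next_token(rng: random.Random) -> str:
    """Draw one token at random, weighted by its (made-up) probability."""
    tokens = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random()  # unseeded, so each run of the script differs
samples = [sample_next_token(rng) for _ in range(10)]
print(samples)
```

Even though "97" is the most likely continuation here, it is chosen well under half the time, so asking the same question twice can easily produce two different wrong answers.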

This is ironically demonstrated by a second result I got today for the same four-digit multiplication: this time it totally screwed the commas up.

Hilariously enough, though, this is technically the least wrong answer. With the commas fixed, 16,789,535 is off from the correct answer by only 7,700, roughly a 10x improvement on the first result!
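For anyone who wants to check my arithmetic, this short Python snippet compares the three answers quoted in this article against the exact product. The labels are mine.

```python
CORRECT = 2345 * 7163  # exact product: 16797235

# The three answers ChatGPT gave me, in the order they appear above.
answers = {
    "first answer (29 January)": 16_719_835,
    "second answer (today)": 16_826_295,
    "third answer (today, commas fixed)": 16_789_535,
}

# Absolute error of each answer against the exact product.
errors = {label: abs(answer - CORRECT) for label, answer in answers.items()}
for label, error in errors.items():
    print(f"{label}: off by {error:,}")
# first answer (29 January): off by 77,400
# second answer (today): off by 29,060
# third answer (today, commas fixed): off by 7,700
```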

If you’re really impatient, technical enough to write some code, and desperately need some sort of LLM to do math for you, there are some interesting ways to get around these limitations in the short term.

This actually works! (You need an API key to try it) https://t.co/1TPDyYy87C https://t.co/Iz8xF19yPj pic.twitter.com/Vo6OUfQZJV

— Amjad Masad ⠕ (@amasad) September 11, 2022
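One general shape such a workaround can take: have the model produce an arithmetic expression instead of an answer, then let ordinary code evaluate it. The sketch below is my own illustration, not the code from the tweet above. `ask_model_for_expression` is a hypothetical stand-in that returns a hard-coded string where a real API call would go, and `safe_eval` is a small helper that only accepts `+`, `-` and `*` on whole numbers.

```python
import ast
import operator

# Only these operators are allowed; anything else is rejected.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}

def safe_eval(expression: str) -> int:
    """Evaluate +, -, * over integer literals without calling eval()."""
    def walk(node: ast.AST) -> int:
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)

def ask_model_for_expression(question: str) -> str:
    """Hypothetical stand-in for an LLM call; a real one would need an API key."""
    return "2345 * 7163"

question = "What is 2345 multiplied by 7163?"
print(safe_eval(ask_model_for_expression(question)))  # 16797235
```

The language model does what it is good at (turning a sentence into an expression) and the deterministic part does the actual multiplication.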

Omit needless words

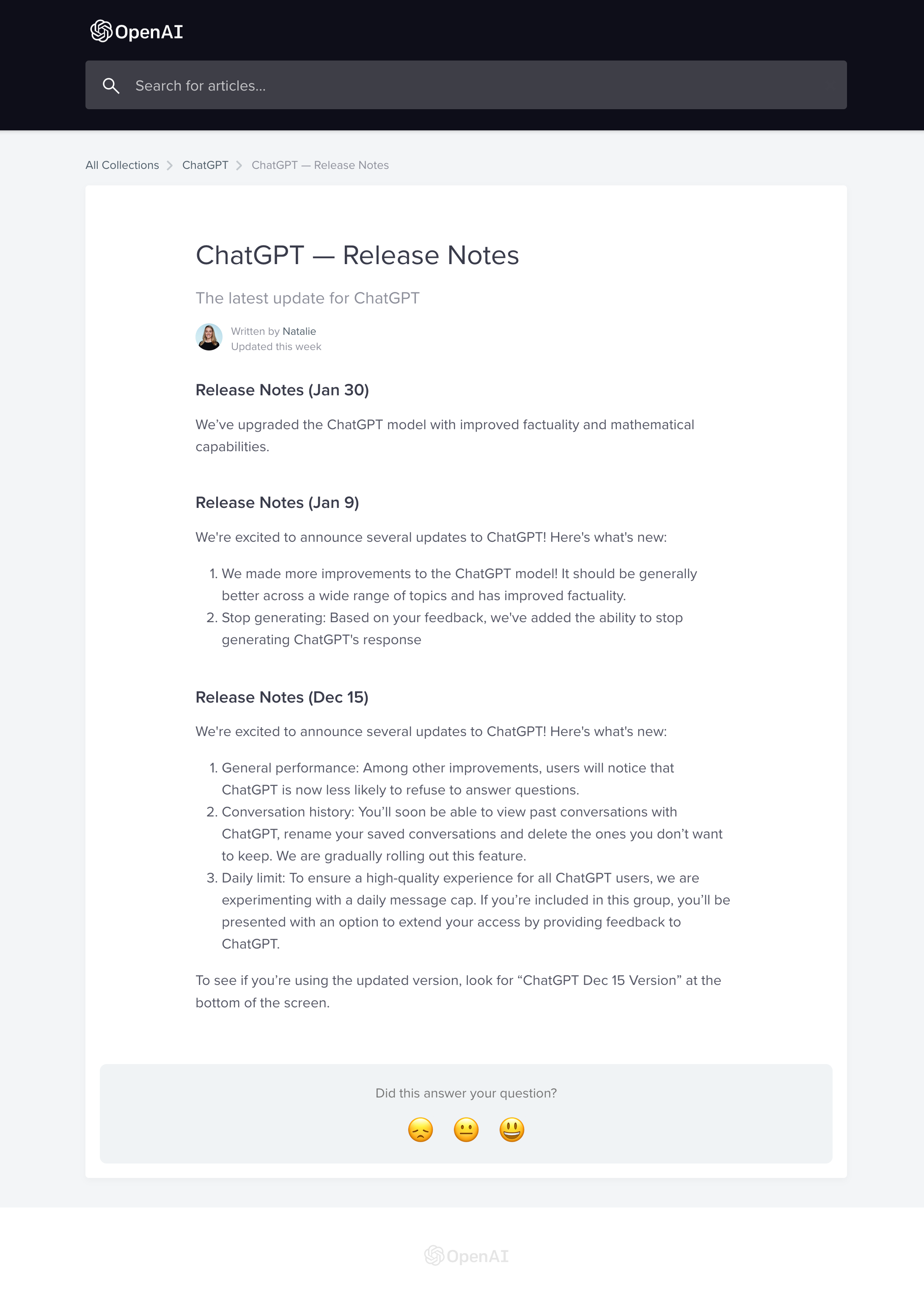

While we’re talking about ChatGPT updates, you have to admire how brief OpenAI’s ChatGPT release notes are. All three of their updates fit into one screenshot, delightfully devoid of any and all “bug fixes and performance improvements” nonsense.

ChatGPT was released on 30 November 2022, which puts the 30 January 2023 update 61 days after launch.

Interestingly enough, the first two updates landed on days that I can only describe as “nice numbers,” and the third just missed one.

- Dec 15 update: 15 days post-release

- Jan 9 update: 40 days post-release

- Jan 30 update: 61 days post-release (one day past a round 60)
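The day counts are easy to check with Python's standard `datetime` module. The variable names are mine; the dates are the release and update dates mentioned above.

```python
from datetime import date

RELEASE = date(2022, 11, 30)  # ChatGPT's public release

UPDATES = {
    "Dec 15": date(2022, 12, 15),
    "Jan 9": date(2023, 1, 9),
    "Jan 30": date(2023, 1, 30),
}

# Subtracting two dates gives a timedelta; .days is the whole-day gap.
days_since_release = {label: (day - RELEASE).days for label, day in UPDATES.items()}
for label, days in days_since_release.items():
    print(f"{label} update: {days} days post-release")
```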

I’m not sure what to do with this information but found it interesting.

ChatGPT has no idea about these new capabilities

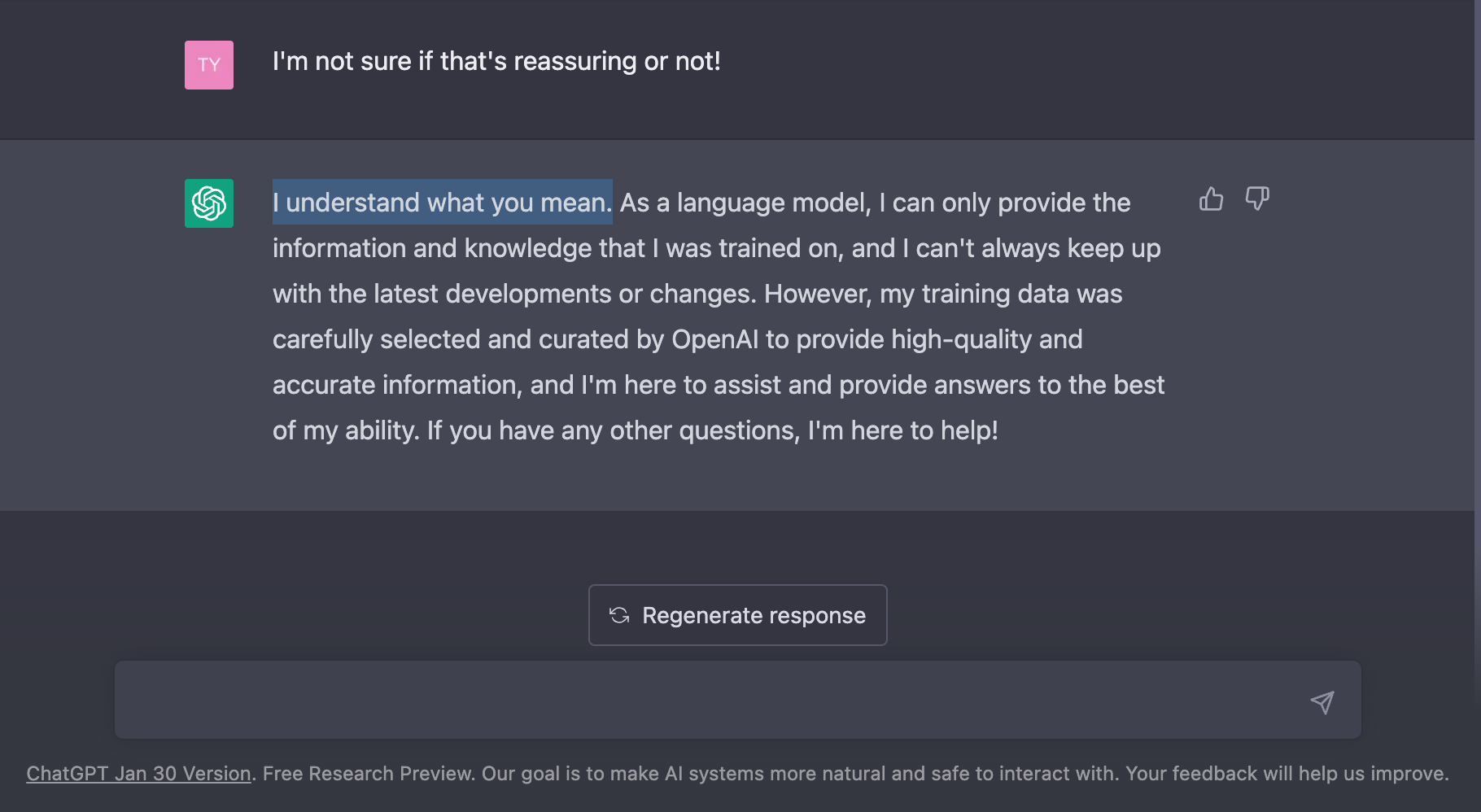

ChatGPT, for what it’s worth, has no idea about any of these updates. Check out this conversation I had with it while writing this article.

It knows that it gets updates, but it doesn’t know what those updates are. It also doesn’t seem to know what the date is? I’m not sure if that’s reassuring or not. So I said that to ChatGPT.

ChatGPT says it understands what I mean.