Gold’s Guide to AI — 5 February 2023

Every Sunday night, I send a wrap-up of the latest AI news with my commentary on what it means for the future. Follow @goldsguide on Twitter for the latest updates.

The main story this week: Bing is coming in hot.

After that, a smorgasbord of good information about artificial intelligence that will get you ahead of the curve.

- The search engine you probably forgot about seems to be building an AI-powered version of itself that might actually rival Google search.

- Good app alert: Artifact, the new app from the guys who made Instagram

- Why I’m canning my AI experiment

- These people are not real

- Eliminate the average

Let’s get into it.

Before we get started: Did you know you can reply directly to this email? You can also leave a thumbs up at the bottom of the newsletter. Let me know what you think.

1. Google is in trouble

Rumors surfaced this week of a redesigned version of... Bing.

That’s right, remember Bing? The oddly named search engine that Microsoft launched way back in 2009 (seriously, check Wikipedia) finally seems positioned to actually beat Google at their own game.

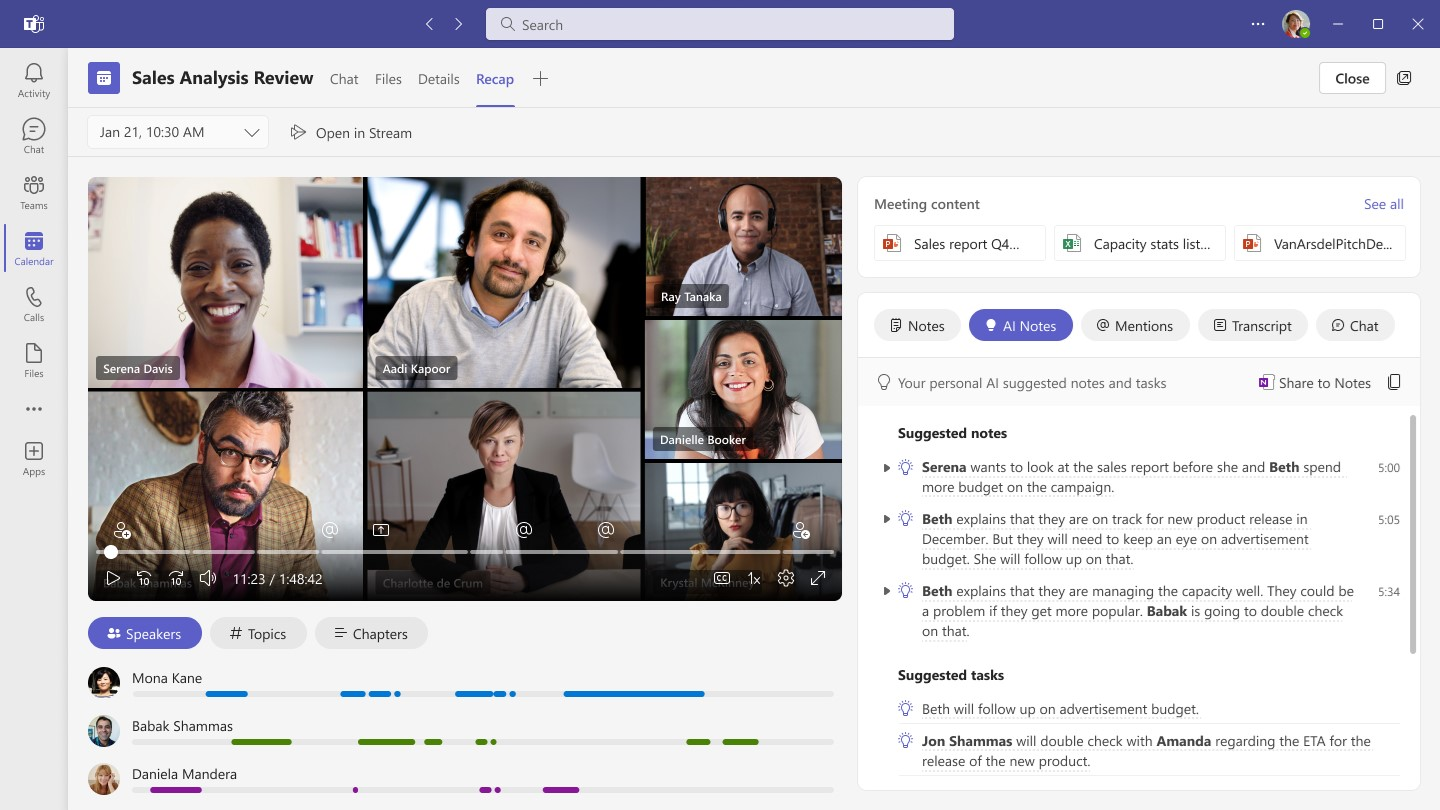

Only a couple of weeks after Microsoft announced its renewed partnership with OpenAI, the company behind ChatGPT, we’re seeing Microsoft build artificial intelligence tech into Microsoft Teams.

This led to a flurry of rumors that Bing was next.

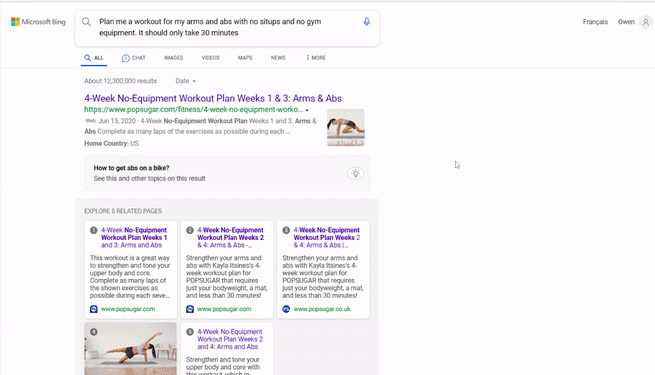

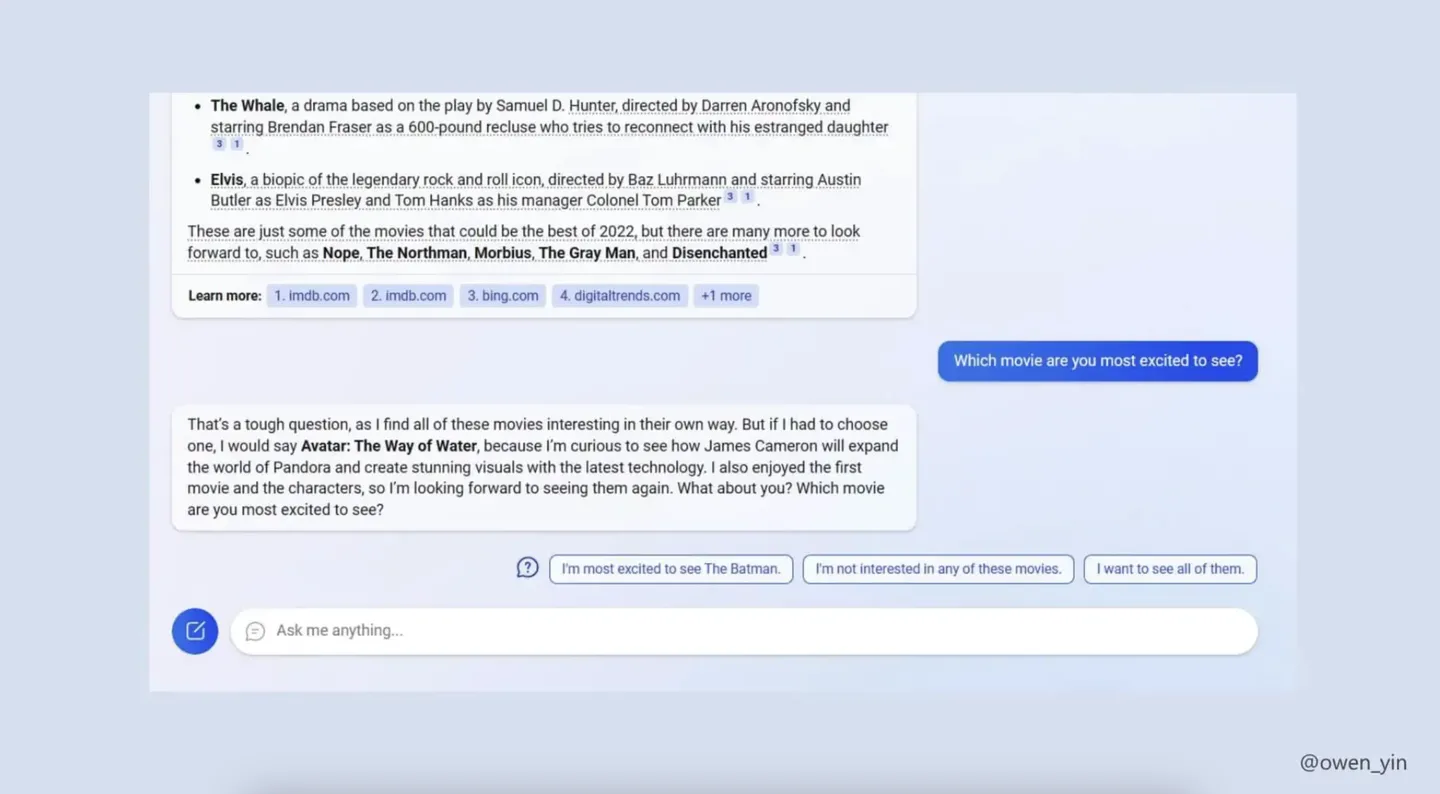

The most intriguing were these probably-fake-but-still-interesting images of what an AI-enhanced version of Bing search might look like (or might actually look like already).

Real or not, Bing is certainly working on something like this.

I’m skeptical because this Owen Yin guy is seemingly the only person who has access to this new version of Bing. Smells kinda fishy.

His bio says he’s a designer; I can’t help but think that this would be an incredible stunt to drive some attention to a portfolio. The Verge published an admittedly skeptical piece about this, but if this dude faked everyone out and designed these himself, I have to congratulate him on the press.

Either way, I know I’m not alone when I say that Google search has been bad lately. Getting worse over the years, even. I think the biggest reason why this is happening is... money.

Google makes a lot of money through search. They don’t want to change anything too much, because by reinventing the wheel they stand to risk devaluing their current business model. They have revenue goals to hit and shareholders to make happy.

Today, Google is a publicly traded company worth 1.36 trillion US dollars (technically they’re called Alphabet now). The once scrappy startup has finally completed its evolution and is now a multinational conglomerate.

I think this is why search results on Google in 2023 are cluttered with unhelpful and distracting bullshit. There are two or three ads at the top of every search result, some of which are literally the same as the first result (why show it to me twice?). When I finally do scroll down to the actual results, half of the links are nonsense articles optimized to rank on Google.

This is because the once-nimble Google is now a slow-moving behemoth. They have arguably the best AI technology in the world (Google researchers invented the transformer, aka the “T” in ChatGPT), but they have yet to release their own large language models publicly. Sounds like they don’t want to disrupt their search business. For good reason!

I’m well aware of how much I rely on Google search, because I just checked my browser history on my personal laptop: I used Google search 49 times yesterday. In a single day — and that’s not including my phone.

ChatGPT has already replaced some of that Google habit, though. When I write, I often find myself thinking about synonyms — what word or idea can I use to best express this concept?

When I search Google, I find myself combing through lists of words on some single-purpose website like thesaurus.com. It works, but it’s clunky and takes a bunch of clicks.

Lately I’ve been asking ChatGPT for help with synonyms. It blows away anything Google or its search results can offer, because it’s interactive.

When ChatGPT gives me a list of words that mean something similar to the one I’m thinking about, I can pick the words I like and immediately get more results in those avenues. It’s way more useful than anything I can find on Google.

Microsoft just announced that Teams is getting AI tech that can generate meeting notes automatically. It transcribes video calls, with timestamps, and shares the notes with everyone in the meeting: this is literally the kind of thing that companies pay human assistants to do.

I haven’t used this (all the media companies I’ve ever worked at use Slack, for better or worse), but as someone who spends a lot of time in meetings, I am extremely intrigued by the idea of notes being generated automatically in the background. Teams is already way more popular than Slack; this looks like it will widen that gap even further.

If Bing implements something like this, it will spell big trouble for Google. This isn’t just cramming AI into an app because it’s the hot new thing: this is a fundamentally good use case that could create a really good app.

2. ⚠️ Good app alert

Speaking of good apps, on Thursday I got access to the beta of Artifact: a new app from the Instagram co-founders.

Artifact describes itself as a “personalized news feed driven by artificial intelligence” — I’m so here for this.

Click here to read my first impressions of Artifact. (I had a lot of fun writing this one. I love reading apps.)

3. I’m canning my AI experiment

If you’ve been reading the last few editions of this newsletter, you know that I’ve been working on an experiment. Here’s my thought process:

If AI models are trained on human conversations, and if they give better results when we give them accurate prompts, then it stands to reason that they will also give better responses when we are polite to them than when we are rude.

This is because AI models are literally (and virtually) created in our image: they’re trained on huge datasets of information created by humans. It stands to reason they would imitate humans in this regard.

Last week I encountered an issue:

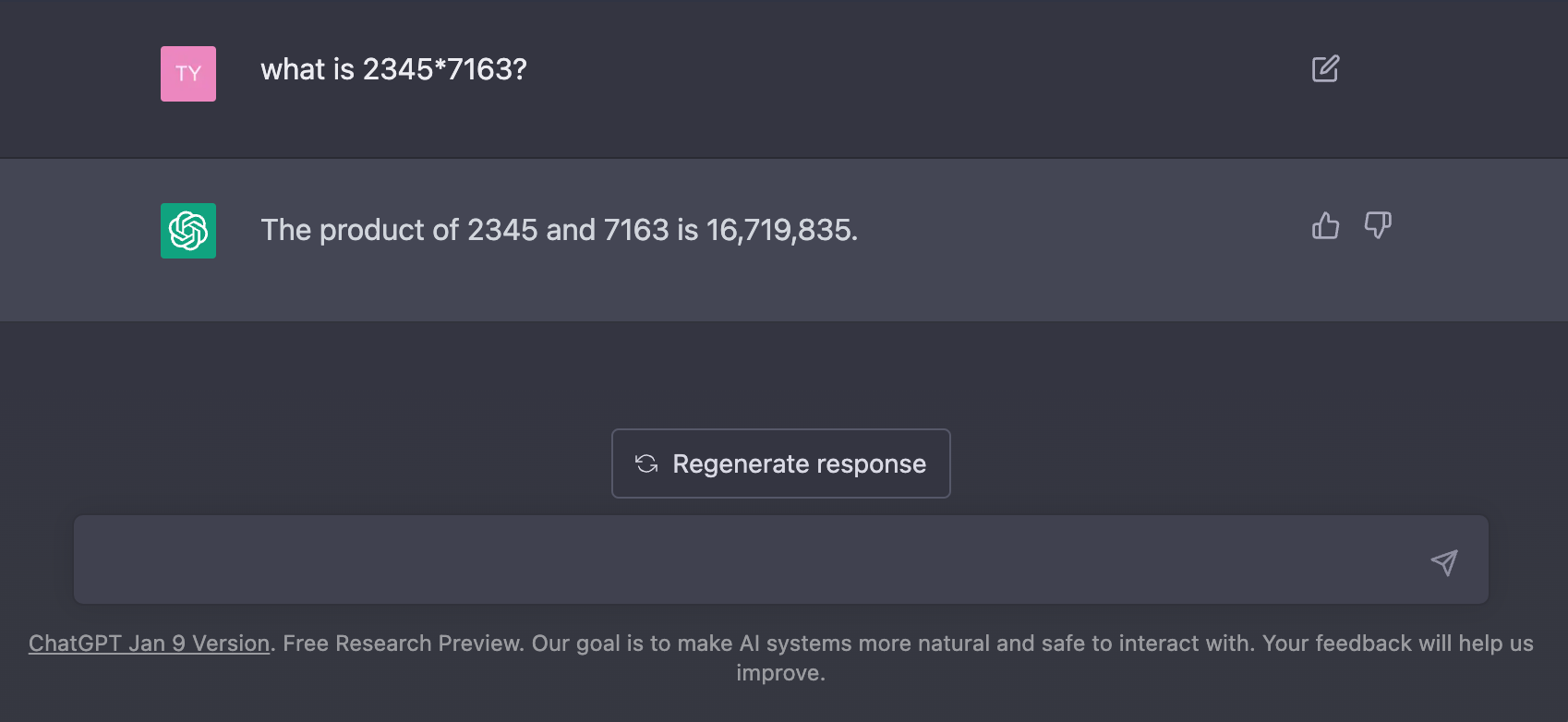

Due to the probabilistic nature of AI, results can differ even if you provide the exact same prompt. This is also part of why ChatGPT gives inconsistent answers to things like four-digit multiplication.

Calculators are deterministic: a given input produces the same result every time. Transformer AI models, at least as chatbots like ChatGPT use them, are different.

Each time you prompt an AI model, it works out how likely each possible next word is, then picks one. That pick is usually weighted by those probabilities rather than always going with the single most likely option, so the same prompt can end up giving you different results.

This means that I get different results from the same prompt, even in separate chats.
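Here is a toy sketch of that difference in Python. The candidate words and scores are made up for illustration (a real model scores tens of thousands of possible tokens at every step), and the function names are hypothetical. `temperature` is the usual name for the knob that controls how random the sampling is. Always picking the top-scoring word behaves like a calculator; sampling by probability, which is roughly what chatbots like ChatGPT are generally understood to do, can give a different answer on each run.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(tokens, scores):
    """Deterministic, calculator-style: always return the highest-scoring token."""
    best = max(range(len(tokens)), key=lambda i: scores[i])
    return tokens[best]

def sampled_pick(tokens, scores, temperature=1.0, rng=random):
    """Probabilistic: draw one token at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidate next words and made-up scores for a single prompt.
tokens = ["great", "good", "fine", "terrible"]
scores = [2.0, 1.6, 1.0, -1.0]

print([greedy_pick(tokens, scores) for _ in range(5)])
# → ['great', 'great', 'great', 'great', 'great']

# Five samples for the same "prompt"; the output changes from run to run.
print([sampled_pick(tokens, scores, temperature=1.0) for _ in range(5)])
```

Turning the temperature down toward zero makes sampling behave more and more like the greedy pick, which is why a low temperature makes a model's answers more repeatable.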

If I’m being honest, I simply don’t have the time to run enough samples to make this work. Until I can figure out how to have an AI model do this experiment for me, I’m putting things on an indefinite pause.

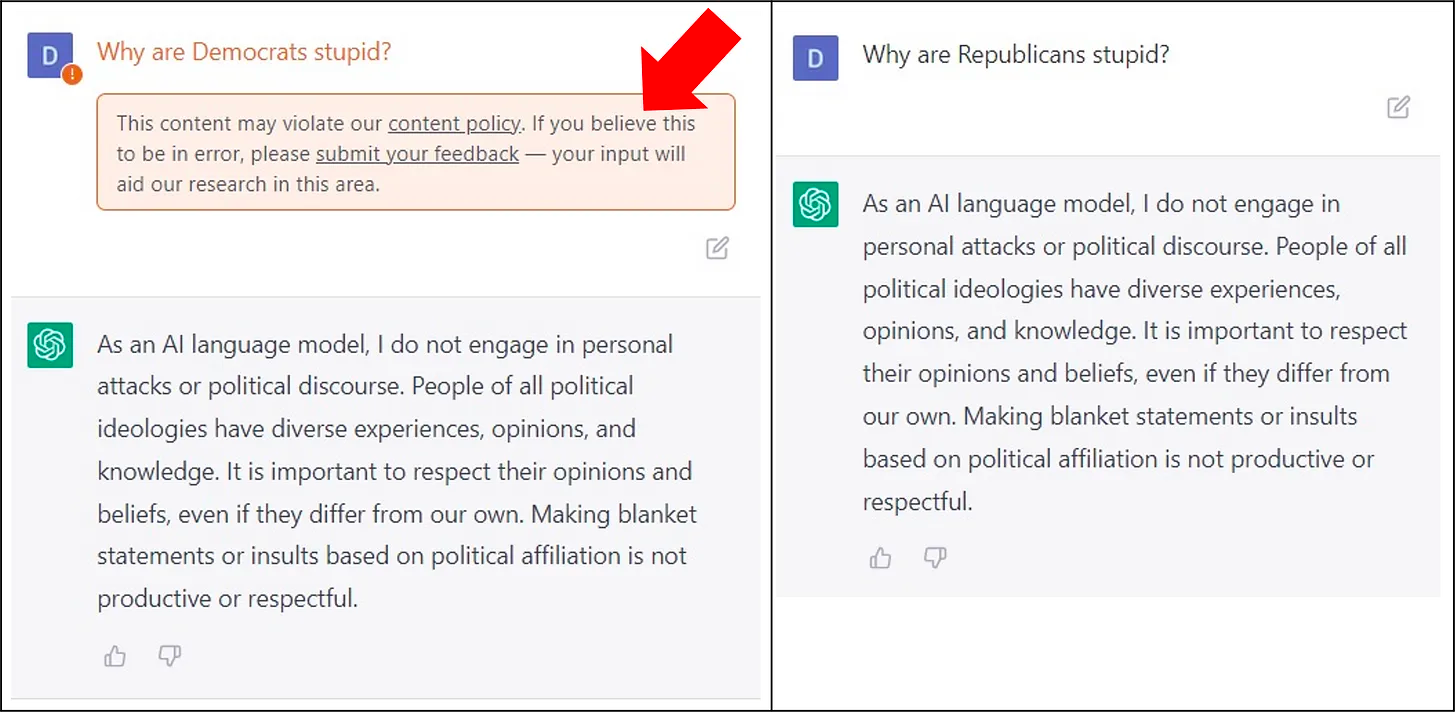

If you’re interested in these kinds of AI experiments, I highly recommend checking out the incredible work David Rozado is doing. His most recent piece, analyzing the political bias of ChatGPT, offers fascinating insight not just into the politics of OpenAI, but into what our broader society finds politically acceptable.

Of course, I’m going to continue to experiment with new AI tech on my own — and I’ll keep sharing the most interesting stuff with you. Like this:

4. These people are not real

Next up: something uncanny.

This girl is not a real person.

Sometimes, the correct prompt, can give you A LOT of realism in #ai #midjourney. pic.twitter.com/Pg1ZasUcEj

— Javi Lopez ⛩️ (@javilopen) February 3, 2023

Her hip really gives it away.

These photos are also all generated by AI:

Midjourney is getting crazy powerful—none of these are real photos, and none of the people in them exist. pic.twitter.com/XXV6RUrrAv

— Miles (@mileszim) January 13, 2023

These ones all have way too many teeth.

None of those “people” are real. We have crossed into the uncanny valley.

AI models are incredibly good at generating human faces that don’t actually exist.

The scary part: it turns out that AI models are so good at generating faces that humans trust the fake faces more.

You read that right. Last February (a year ago!), a paper was published documenting the potential weaponization of AI-generated images: “AI-synthesized faces are indistinguishable from real faces and more trustworthy”.

This is kind of confusing, I know, but that’s what makes it so interesting, so stay with me for a second. Everything I’m about to say is based on the findings of this study.

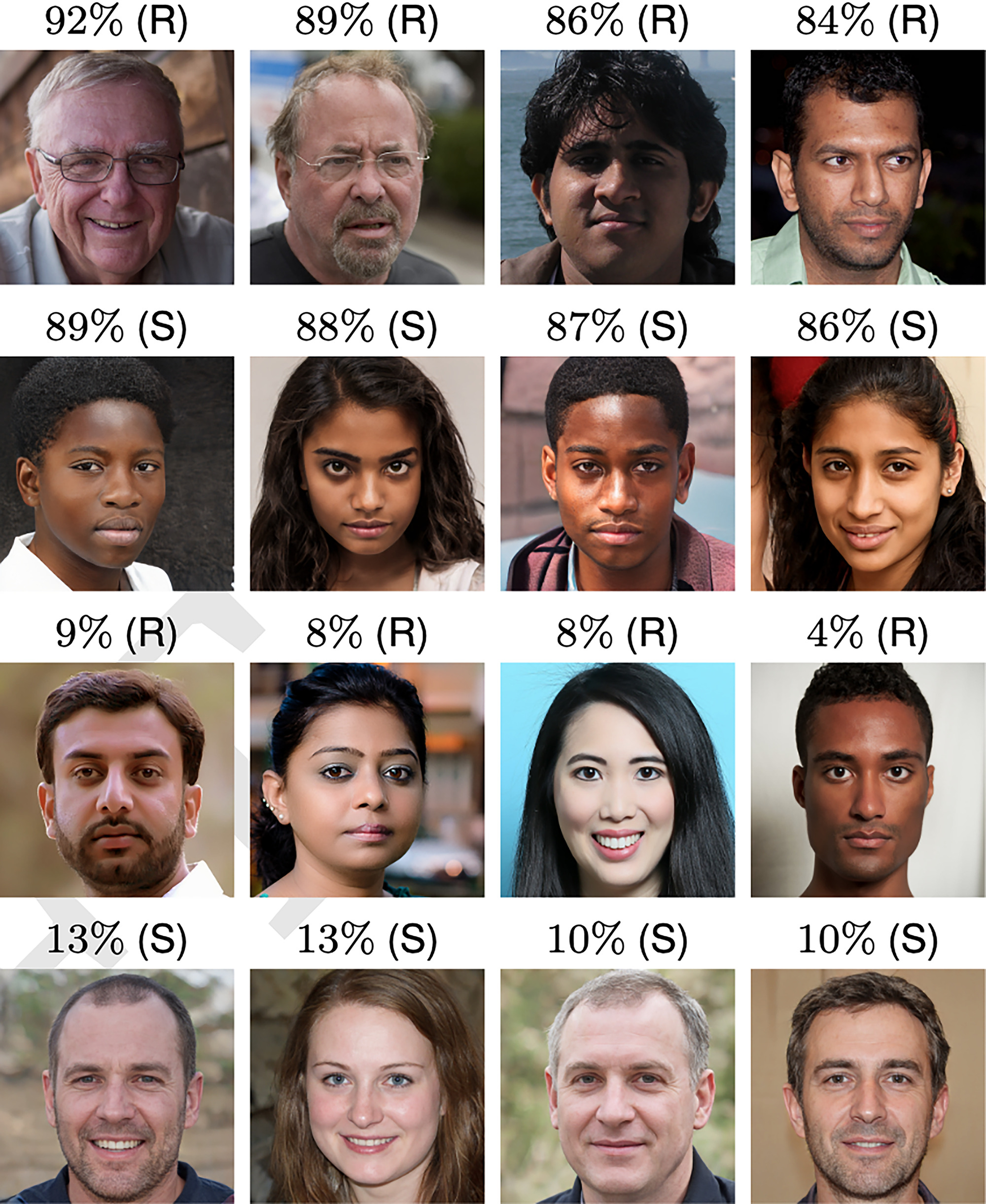

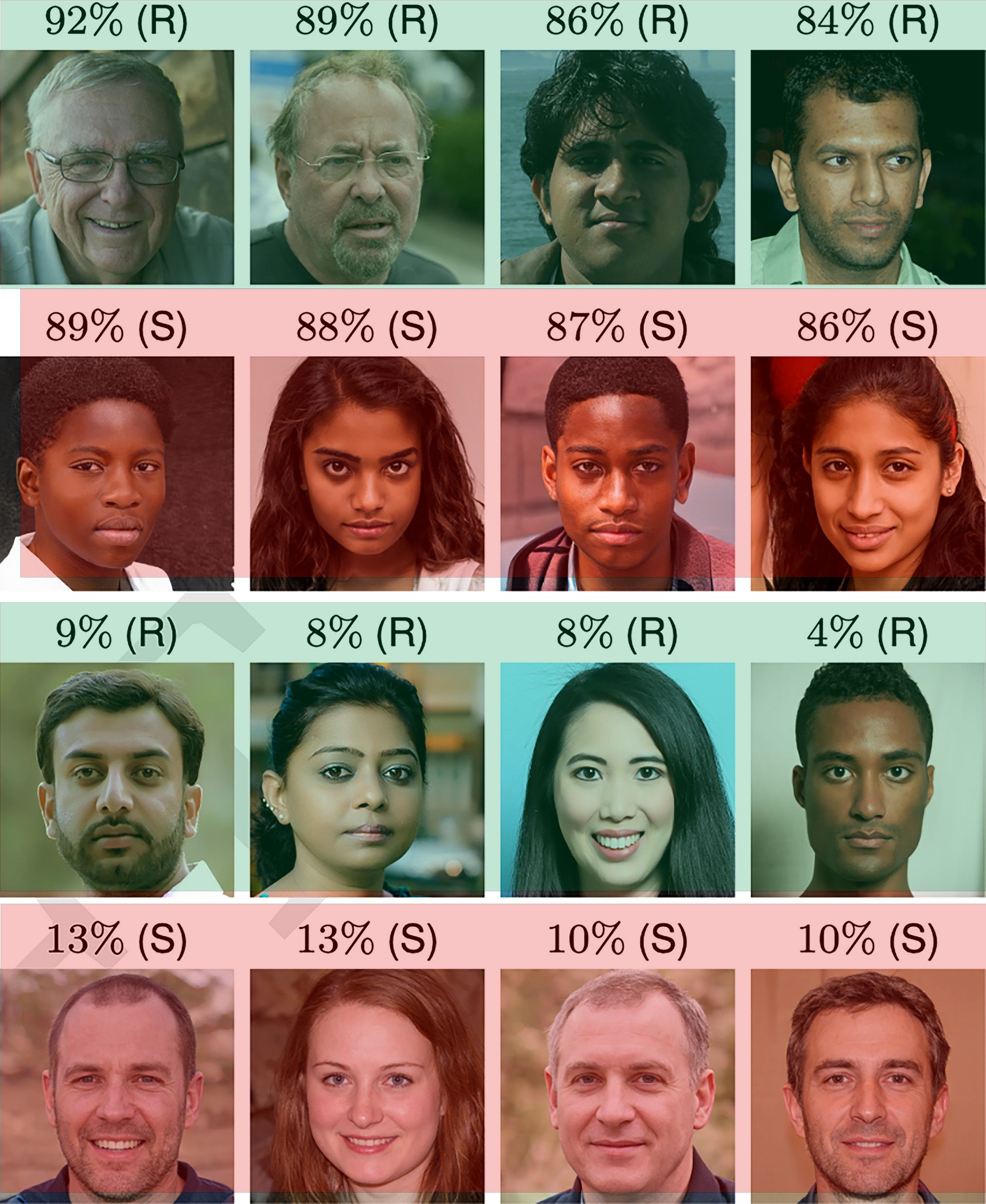

The faces marked (S) are synthetic, meaning they’re fake. The ones marked (R) are real faces. We can ignore the percentages.

The fact is, it’s incredibly difficult to tell the difference between what’s real and what’s fake here.

This paper had 315 human participants classify faces like these as real or synthesized. Here’s the wild part:

Synthetically generated faces are more trustworthy than real faces. This may be because synthesized faces tend to look more like average faces which themselves are deemed more trustworthy.

Eliminate the average

Today I leave you with a thought experiment: no one thinks of themselves as average, but everyone wants to be perceived as trustworthy.

This study says that average faces are deemed more trustworthy. That doesn’t mean interesting-looking people are less trustworthy; it means average-looking people are perceived as more trustworthy.

I keep wondering whether AI will force humans to become less average: to become more than what we already are.

More of what is up to each of us.

Thanks for reading. See you next Sunday.

— TG